- 1 Overview

- 2 Environment Setup

- 3 A note on libraries

- 4 Data Import

- 5 Meta Data

- 6 Defining default

- 7 Removing variables deemed unfit for most modeling

- 8 A note on hypothesis generation vs. hypothesis confirmation

- 9 Exploratory Data Analysis

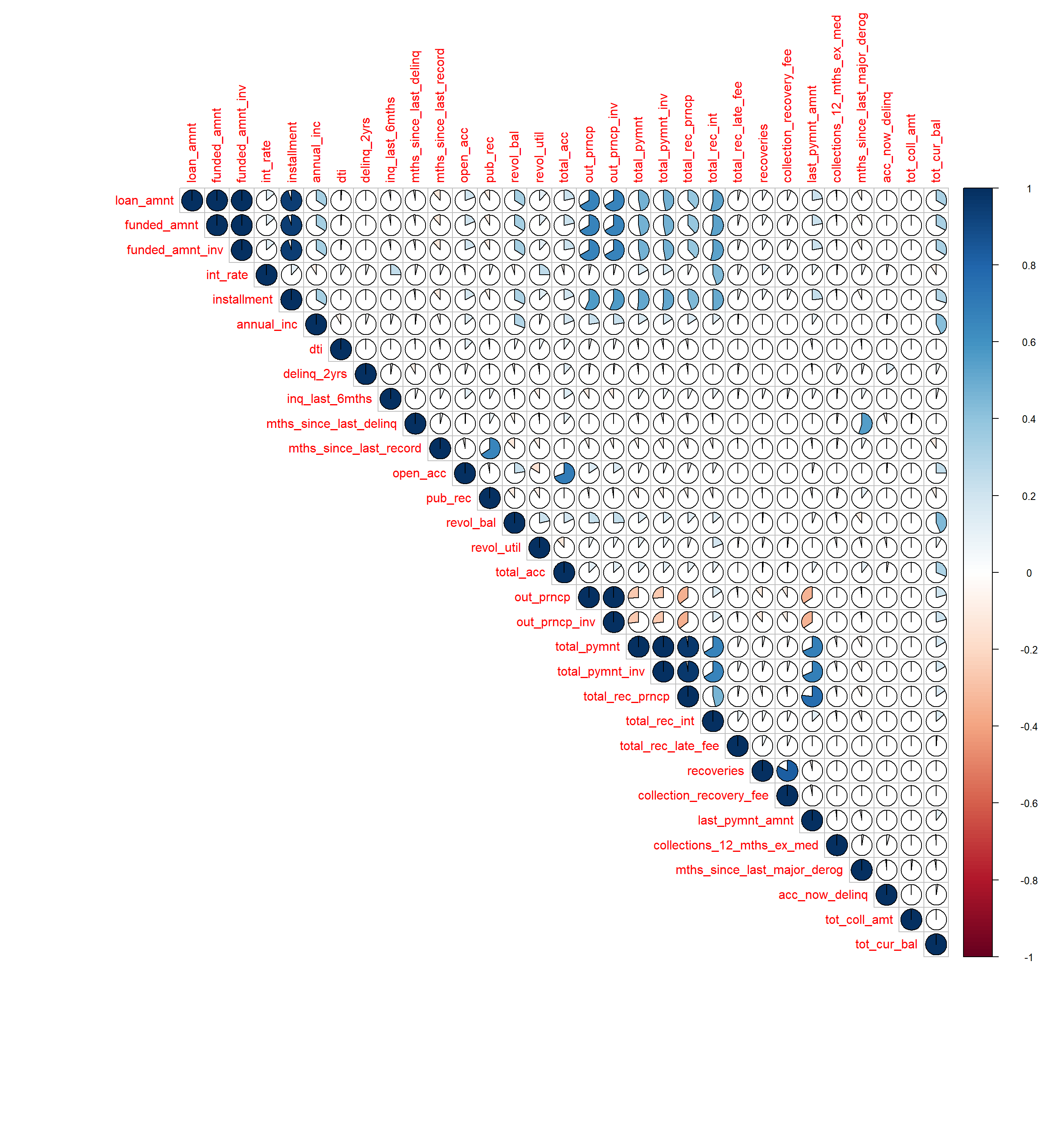

- 10 Correlation

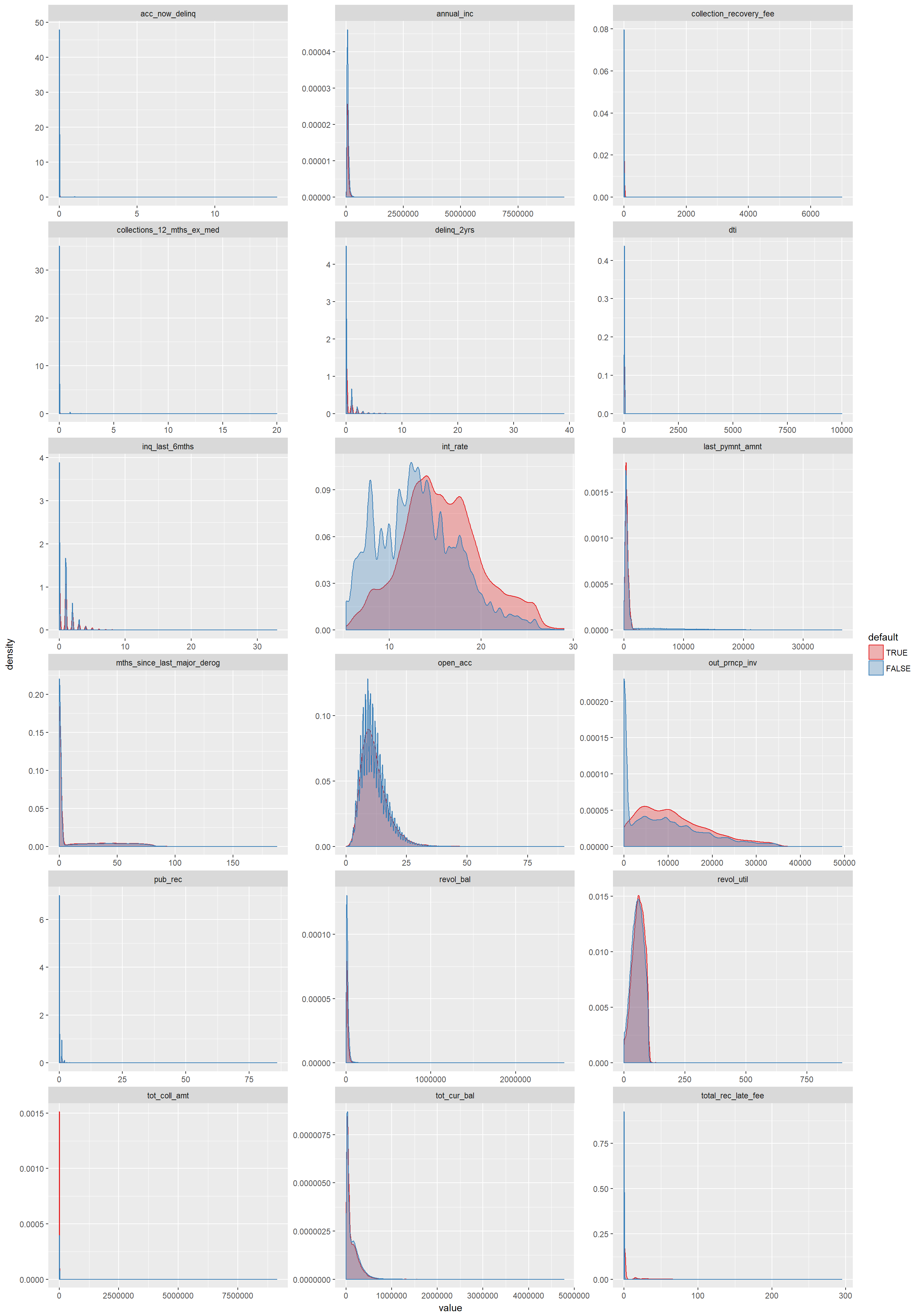

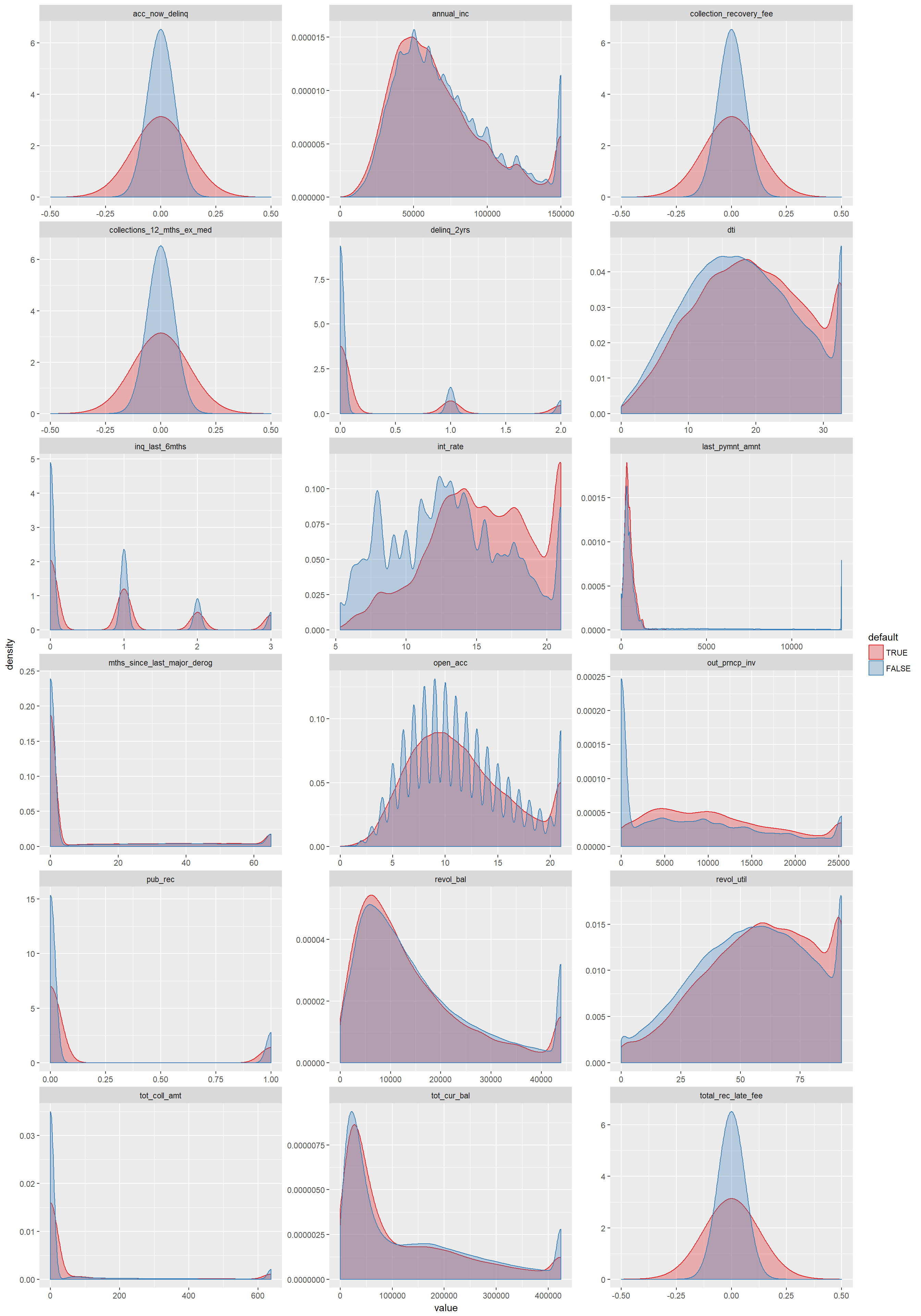

- 11 Summary plots

- 12 Modeling

- 12.1 Model options

- 12.2 Imbalanced data

- 12.3 A note on modeling libraries

- 12.4 Data preparation for modeling

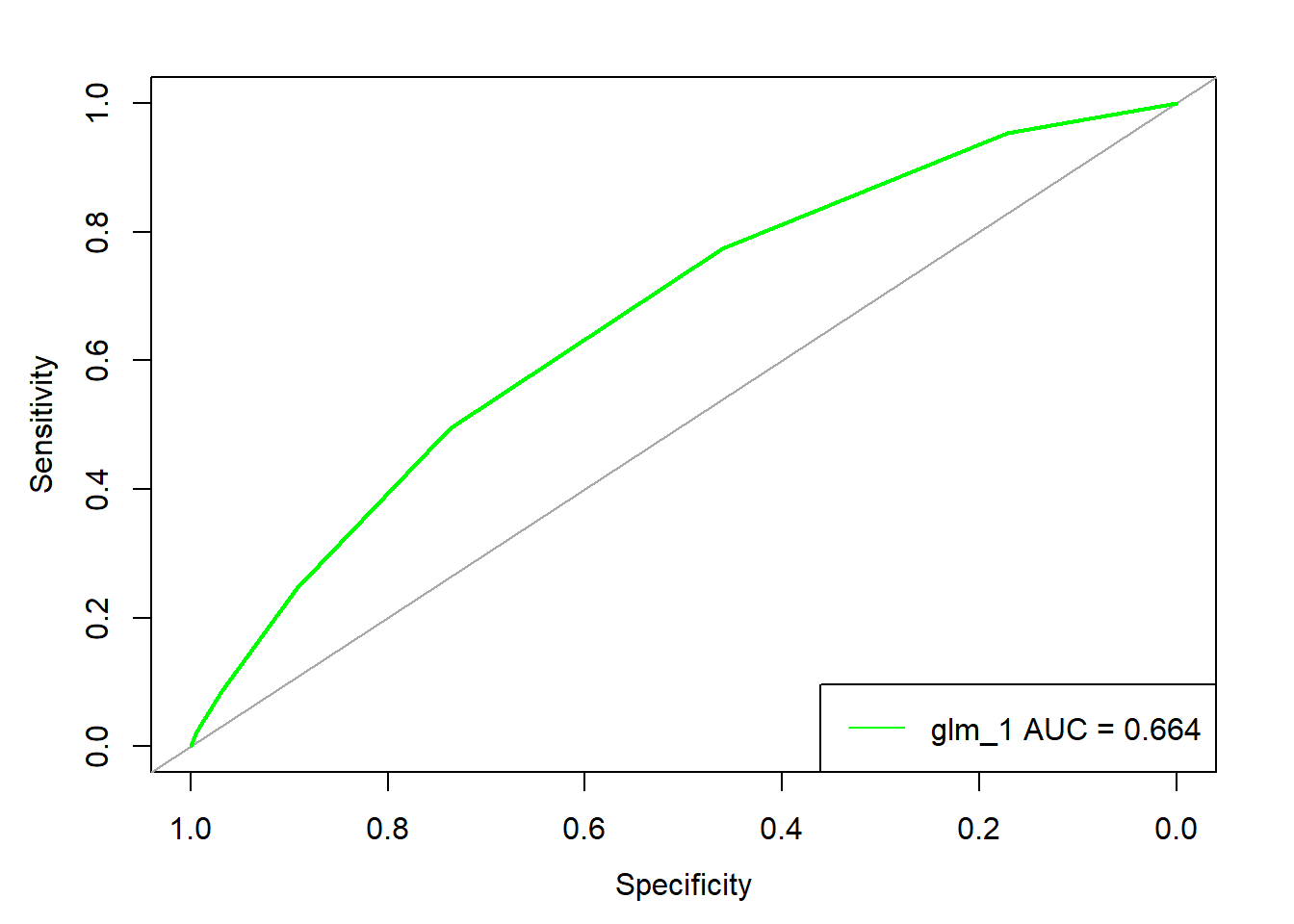

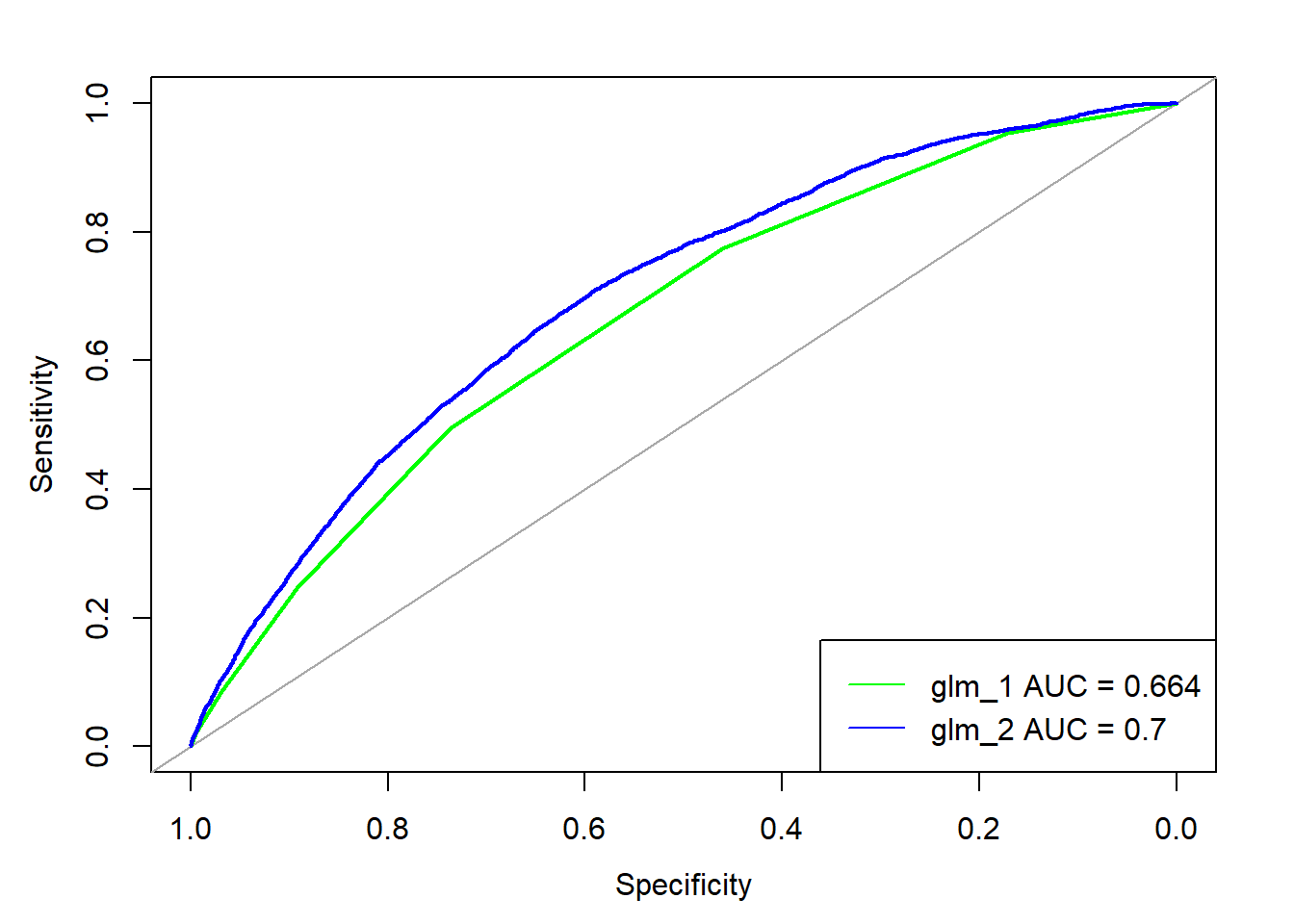

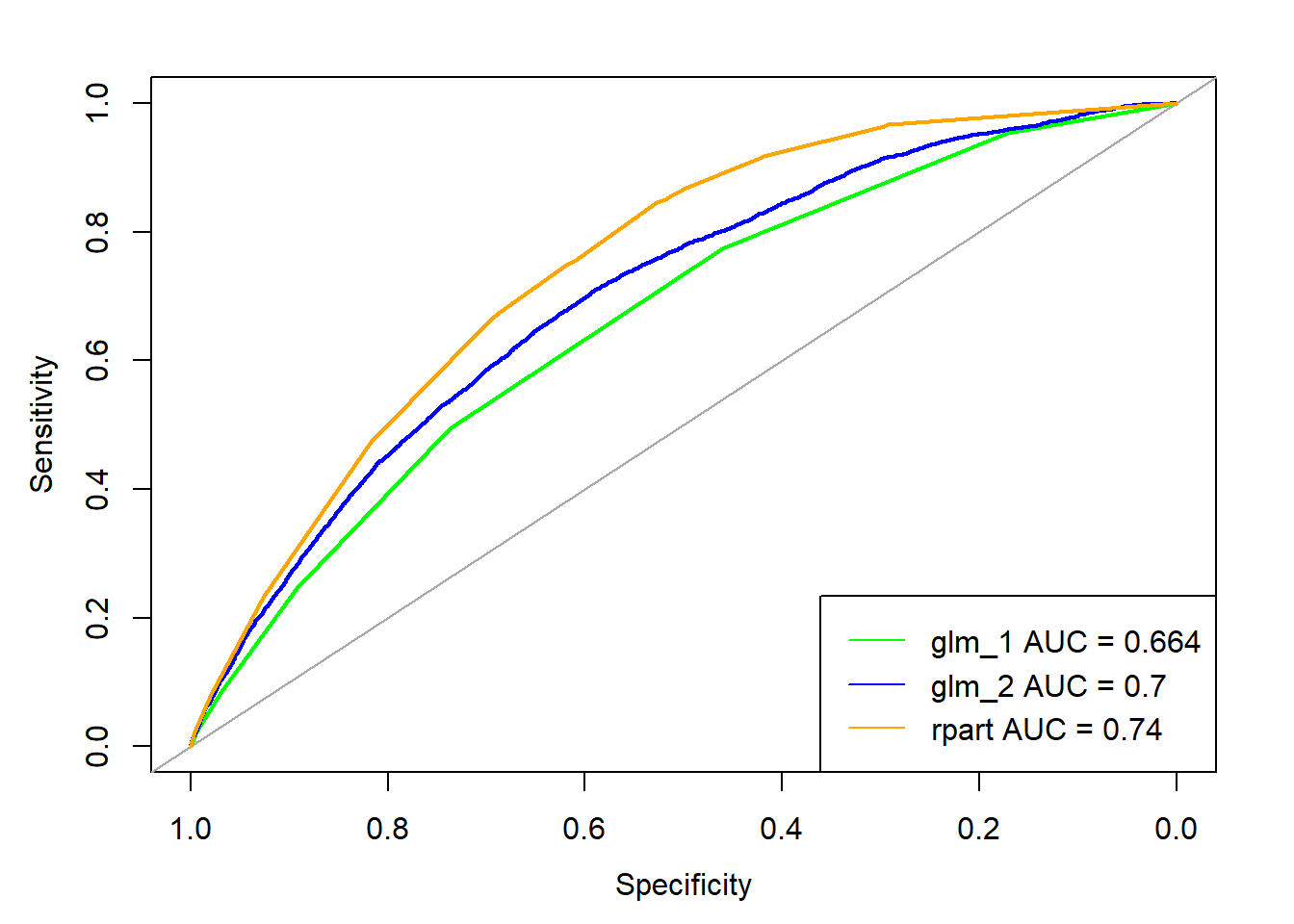

- 12.5 Logistic regression

- 12.6 Model evaluation illustration

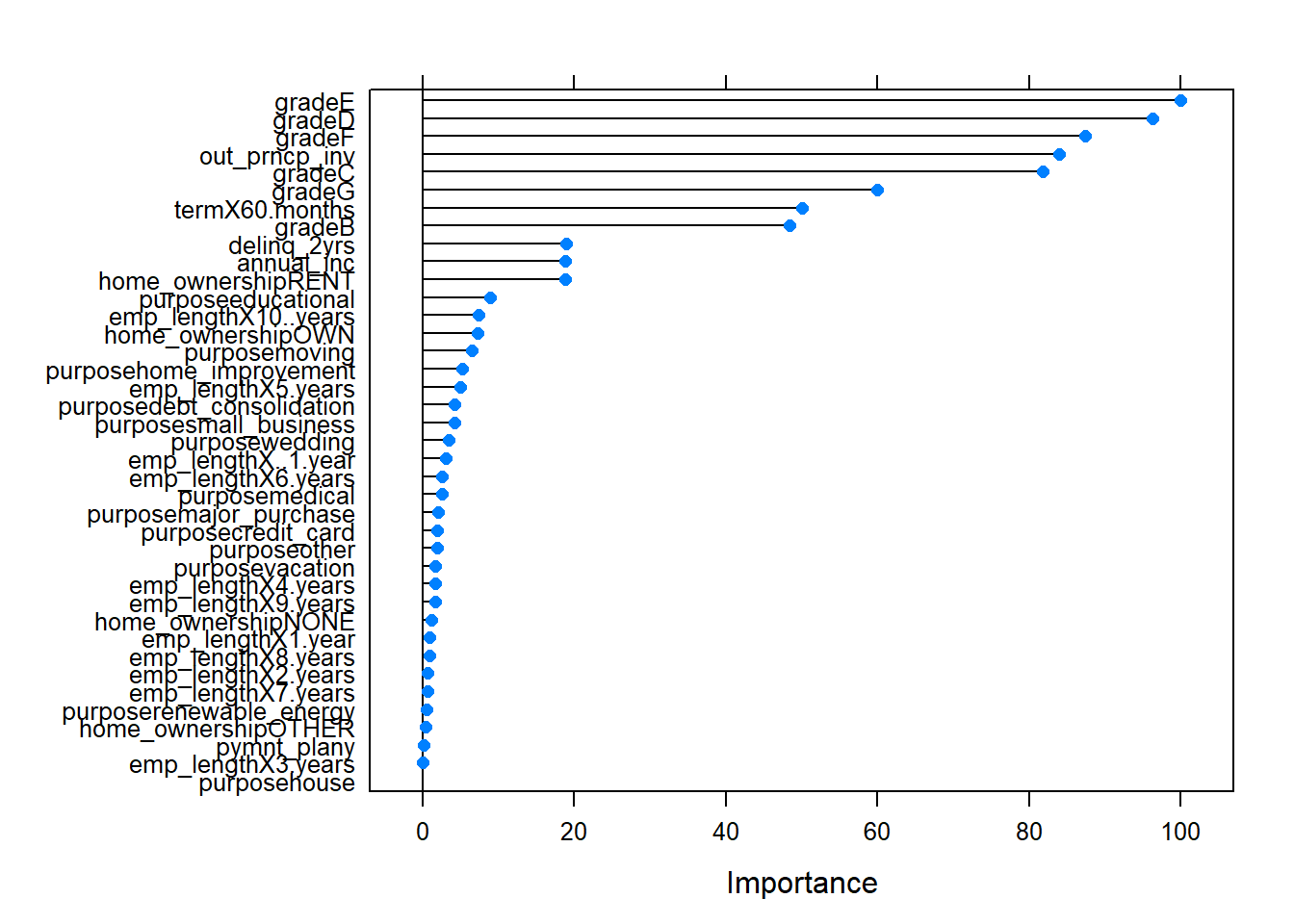

- 12.7 Variable selection

- 12.8 A more complex logistic model with caret

- 12.9 A note on computational efficiency (parallelization via cluster)

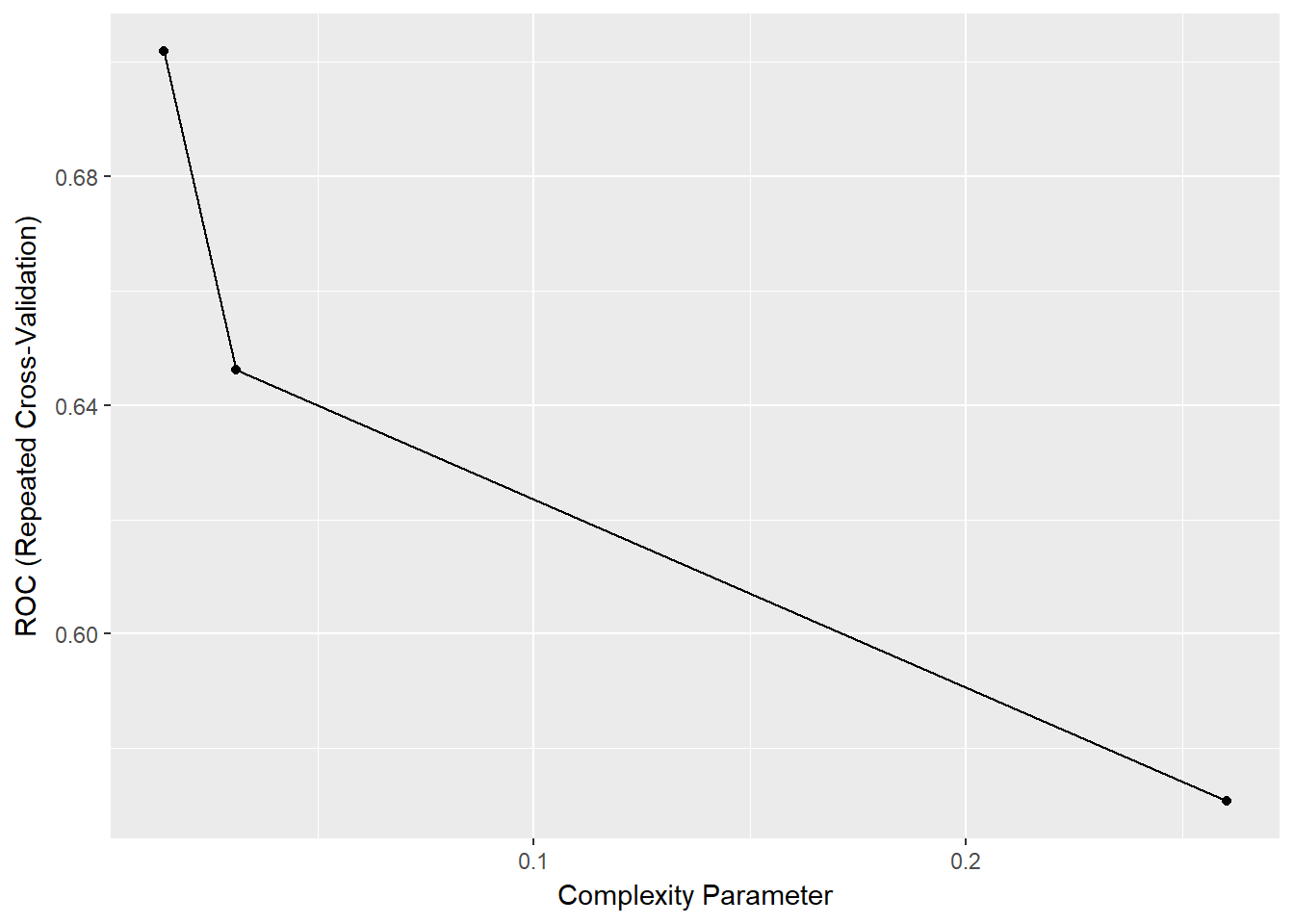

- 12.10 A note on tuning parameters in caret

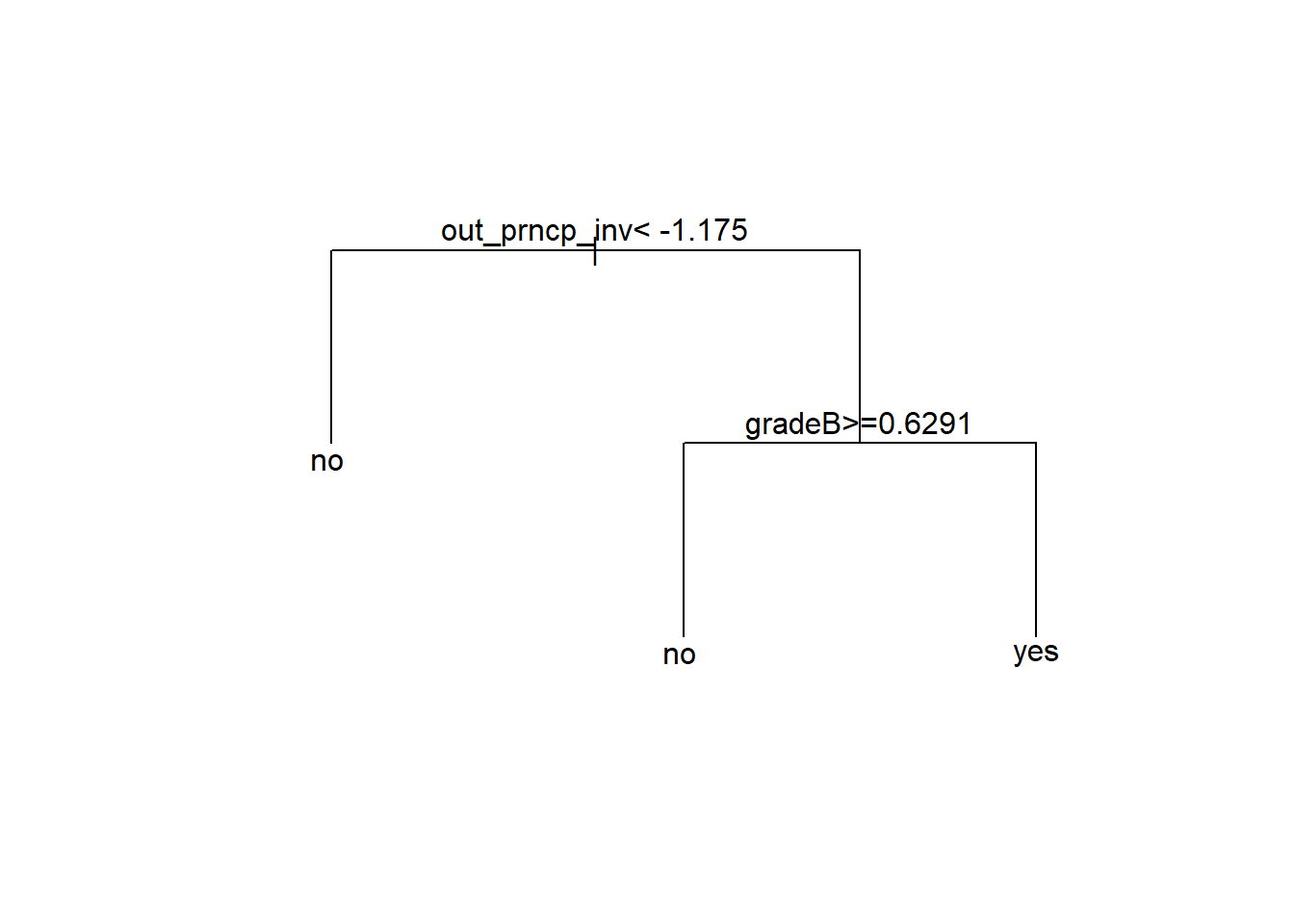

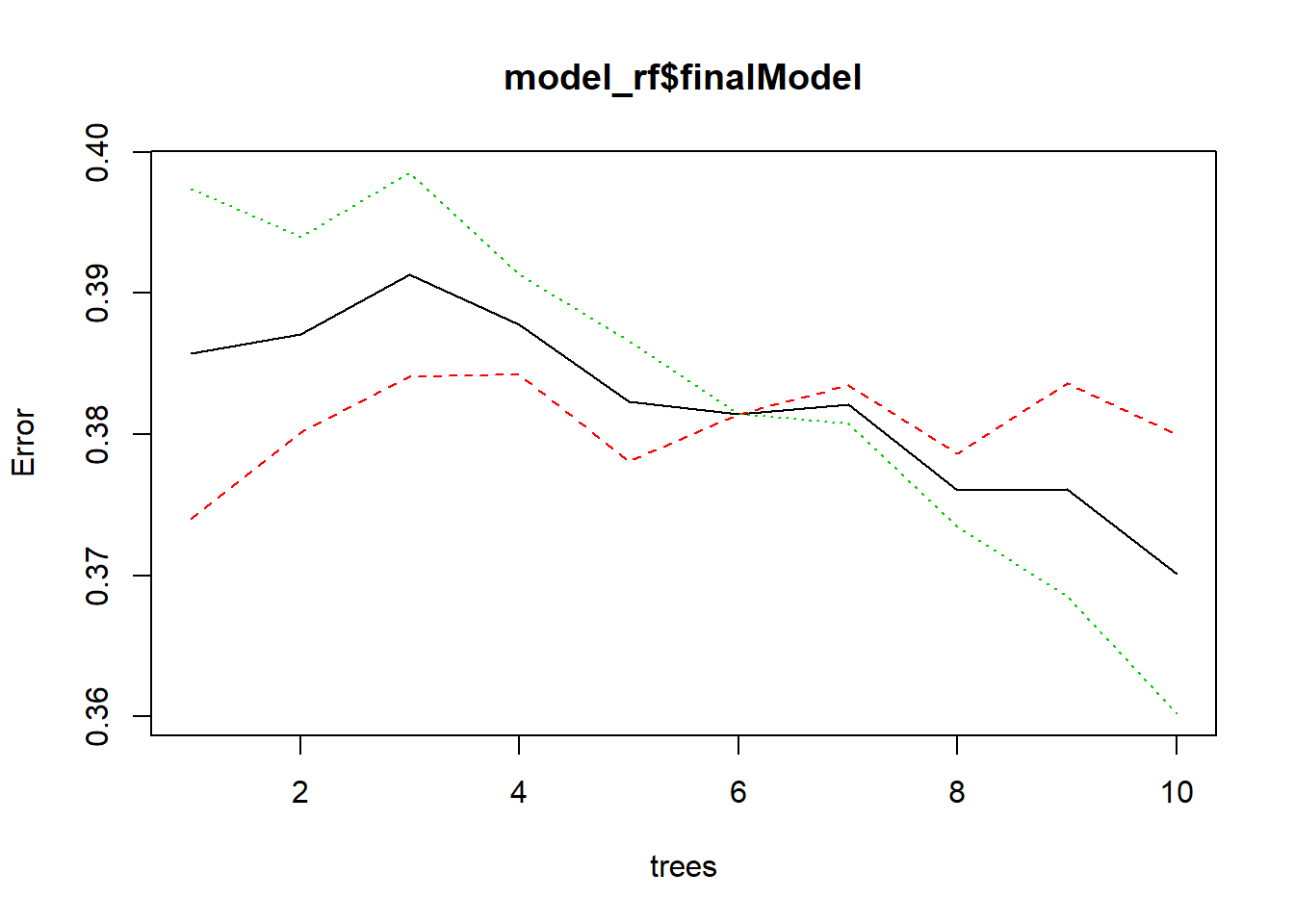

- 12.11 Trees

- 13 Further Resources

1 Overview

A case study of machine learning / modeling in R with credit default data. Data is taken from Kaggle Lending Club Loan Data but is also available publicly at Lending Club Statistics Page. We illustrate the complete workflow from data ingestion, over data wrangling/transformation to exploratory data analysis and finally modeling approaches. Along the way will be helpful discussions on side topics such as training strategy, computational efficiency, R intricacies, and more. Note that the focus is more on methods in R rather than statistical rigour. This is meant to be a reference for an end-to-end data science workflow rather than a serious attempt to achieve best model performance.

1.1 Kaggle Data Description

The files contain complete loan data for all loans issued through 2007-2015, including the current loan status (Current, Late, Fully Paid, etc.) and latest payment information. The file containing loan data through the “present” contains complete loan data for all loans issued through the previous completed calendar quarter. Additional features include credit scores, number of finance inquiries, address including zip codes, and state, and collections among others. The file is a matrix of about 890 thousand observations and 75 variables. A data dictionary is provided in a separate file.

2 Environment Setup

2.1 Libraries

This will automatically install any missing libraries. Make sure to have proper proxy settings. You do not necessarily need to have all of them but only those needed for respective sections (which will be indicated).

# define used libraries

libraries_used <-

c("lazyeval", "readr","plyr" ,"dplyr", "readxl", "ggplot2",

"funModeling", "scales", "tidyverse", "corrplot", "GGally", "caret",

"rpart", "randomForest", "pROC", "gbm", "choroplethr", "choroplethrMaps",

"microbenchmark", "doParallel", "e1071")

# check missing libraries

libraries_missing <-

libraries_used[!(libraries_used %in% installed.packages()[,"Package"])]

# install missing libraries

if(length(libraries_missing)) install.packages(libraries_missing)2.2 Session Info

sessionInfo()## R version 3.4.1 (2017-06-30)

## Platform: x86_64-w64-mingw32/x64 (64-bit)

## Running under: Windows 10 x64 (build 15063)

##

## Matrix products: default

##

## locale:

## [1] LC_COLLATE=English_United States.1252

## [2] LC_CTYPE=English_United States.1252

## [3] LC_MONETARY=English_United States.1252

## [4] LC_NUMERIC=C

## [5] LC_TIME=English_United States.1252

##

## attached base packages:

## [1] methods stats graphics grDevices utils datasets base

##

## loaded via a namespace (and not attached):

## [1] compiler_3.4.1 backports_1.1.0 bookdown_0.5 magrittr_1.5

## [5] rprojroot_1.2 tools_3.4.1 htmltools_0.3.6 yaml_2.1.14

## [9] Rcpp_0.12.12 stringi_1.1.5 rmarkdown_1.6 blogdown_0.1

## [13] knitr_1.17 stringr_1.2.0 digest_0.6.12 evaluate_0.10.13 A note on libraries

There are generally two ways to access functions from R packages (we use package and library interchangeably). Either via a direct access without loading the library, i.e. package::function() or by loading (attaching) the library into workspace (environment) and thus making all its functions available at once. The problem with the first option is that direct access sometimes can lead to issues (unexpected behavior/errors) and makes coding cumbersome. The problem with the second approach is a conflict of function (and other variables) names between two libraries (called masking). A third complication is that some functions require libraries to be loaded (e.g. some instances of caret::train()) and thus attach the library without being explicitly told. Again this may lead to masking of functions and if user is unaware this can lead to nasty problems (conceived bugs) down the road (sometimes libraries will issue a warning upon load, e.g. plyr when dplyr is already loaded). There is no golden way (at least we are not aware of it) and so we tend to do the following

- load major libraries that are used frequently into workspace but pay attention to load succession to avoid unwanted masking

- access rarely used functions directly (being aware that they work and don’t attach anything themselves)

- sometimes use direct access to a fucntion although its library is loaded (either to make clear which library is currently used or because we explicitly need a function that has been masked due to another loaded library)

We will load a few essential libraries here as they are used heavily. Other librraies may only be loaded in respective section. You can also try to follow the analysis without loading many librraies at the beginning but only when needed. Just be aware of the succession to avoid issues.

3.1 Library versions

Also note that often the version of the library used matters (it always matters but what we mean is, it makes a difference) and some libraries are developed more actively than others which may lead to issues. For example, note that library caret is using plyr while the tidyverse of which dplyr (successor of plyr) is part has some new concepts, e.g. the default data frame is a tibble::tibble(). It seems that caret has issues with tibbles, see e.g. “Wrong model type for classification” in regression problems in R-Caret.

library(plyr)

library(tidyverse)## Loading tidyverse: ggplot2

## Loading tidyverse: tibble

## Loading tidyverse: tidyr

## Loading tidyverse: readr

## Loading tidyverse: purrr

## Loading tidyverse: dplyr## Conflicts with tidy packages ----------------------------------------------## arrange(): dplyr, plyr

## compact(): purrr, plyr

## count(): dplyr, plyr

## failwith(): dplyr, plyr

## filter(): dplyr, stats

## id(): dplyr, plyr

## lag(): dplyr, stats

## mutate(): dplyr, plyr

## rename(): dplyr, plyr

## summarise(): dplyr, plyr

## summarize(): dplyr, plyrlibrary(caret)## Loading required package: lattice##

## Attaching package: 'caret'## The following object is masked from 'package:purrr':

##

## lift4 Data Import

Refer to section Overview to see how the data may be acquired.

The loans data can be conveniently read via readr::read_csv(). Note that the function tries to determine the variable type by reading the first 1,000 rows which does not always guarantee correct import especially in cases of missing values at the begining of the file. Other packages treat imports differently, for example data.table::fread() takes a sample of 1,000 rows to determine column types (100 rows from 10 different points) which seems to get more robust results than readr::read_csv(). Both package functions allow the explicit definition of column classes, for readr::read_csv() it is parameter col_types and for data.table::fread() it is parameter colClasses. Alternatively, readr::read_csv() offers the parameter guess_max that allows increasing the number of rows being guessed similar to SAS import procedure parameter guessingrows. Naturally, import time increases if more rows are guessed. For details on readr including comparisons against Base R and data.table::fread() see readr.

path <- "D:/data/lending_club"

loans <- readr::read_csv(paste0(path, "./loan.csv"))The meta data comes in an Excel file and needs to be parsed via a special library, in this case we use readxl.

library(readxl)

# Load the Excel workbook

excel_file = paste0("./LendingClubDataDictionary.xlsx")

# see available tabs

excel_sheets(paste0(path, excel_file))## [1] "LoanStats" "browseNotes" "RejectStats"# Read in the first worksheet

meta_loan_stats = read_excel(paste0(path, excel_file), sheet = "LoanStats")

meta_browse_notes = read_excel(paste0(path, excel_file), sheet = "browseNotes")

meta_reject_stats = read_excel(paste0(path, excel_file), sheet = "RejectStats")5 Meta Data

Let’s have a look at the meta data in more detail. First, which variables are present in loan data set and what is their type. Then check meta data information and finally compare the two to see if anything is missing.

The usual approach may be to use base::str() function to get a summary of the data structure. However, it may be useful to quantify the “information power” of different metrics and dimensions by looking at the ratio of zeros and missing values to overall observations. This will not always reveal the truth (as there may be variables that are only populated if certain conditions apply) but it still gives some indication. The funModeling package offers the function funModeling::df_status() for that. It does not scale very well and has quite a few dependencies (so a direct call is preferred over a full library load) but it suits the purpose for this data. Unfortunately, it does not return the number of rows and columns. The data has 887379 observations (rows) and 74 variables (columns).

We will require the meta data at a later stage so we assign it to a variable. The function funModeling::df_status() has parameter print_results = TRUE set by default which means the data will be assigned and printed at the same time.

meta_loans <- funModeling::df_status(loans, print_results = FALSE)

knitr::kable(meta_loans)| variable | q_zeros | p_zeros | q_na | p_na | q_inf | p_inf | type | unique |

|---|---|---|---|---|---|---|---|---|

| id | 0 | 0.00 | 0 | 0.00 | 0 | 0 | integer | 887379 |

| member_id | 0 | 0.00 | 0 | 0.00 | 0 | 0 | integer | 887379 |

| loan_amnt | 0 | 0.00 | 0 | 0.00 | 0 | 0 | numeric | 1372 |

| funded_amnt | 0 | 0.00 | 0 | 0.00 | 0 | 0 | numeric | 1372 |

| funded_amnt_inv | 233 | 0.03 | 0 | 0.00 | 0 | 0 | numeric | 9856 |

| term | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 2 |

| int_rate | 0 | 0.00 | 0 | 0.00 | 0 | 0 | numeric | 542 |

| installment | 0 | 0.00 | 0 | 0.00 | 0 | 0 | numeric | 68711 |

| grade | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 7 |

| sub_grade | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 35 |

| emp_title | 0 | 0.00 | 51457 | 5.80 | 0 | 0 | character | 289148 |

| emp_length | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 12 |

| home_ownership | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 6 |

| annual_inc | 2 | 0.00 | 4 | 0.00 | 0 | 0 | numeric | 49384 |

| verification_status | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 3 |

| issue_d | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 103 |

| loan_status | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 10 |

| pymnt_plan | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 2 |

| url | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 887379 |

| desc | 0 | 0.00 | 761597 | 85.83 | 0 | 0 | character | 124453 |

| purpose | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 14 |

| title | 0 | 0.00 | 151 | 0.02 | 0 | 0 | character | 61452 |

| zip_code | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 935 |

| addr_state | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 51 |

| dti | 451 | 0.05 | 0 | 0.00 | 0 | 0 | numeric | 4086 |

| delinq_2yrs | 716961 | 80.80 | 29 | 0.00 | 0 | 0 | numeric | 29 |

| earliest_cr_line | 0 | 0.00 | 29 | 0.00 | 0 | 0 | character | 697 |

| inq_last_6mths | 497905 | 56.11 | 29 | 0.00 | 0 | 0 | numeric | 28 |

| mths_since_last_delinq | 1723 | 0.19 | 454312 | 51.20 | 0 | 0 | numeric | 155 |

| mths_since_last_record | 1283 | 0.14 | 750326 | 84.56 | 0 | 0 | numeric | 123 |

| open_acc | 7 | 0.00 | 29 | 0.00 | 0 | 0 | numeric | 77 |

| pub_rec | 751572 | 84.70 | 29 | 0.00 | 0 | 0 | numeric | 32 |

| revol_bal | 3402 | 0.38 | 0 | 0.00 | 0 | 0 | numeric | 73740 |

| revol_util | 3540 | 0.40 | 502 | 0.06 | 0 | 0 | numeric | 1356 |

| total_acc | 0 | 0.00 | 29 | 0.00 | 0 | 0 | numeric | 135 |

| initial_list_status | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 2 |

| out_prncp | 255798 | 28.83 | 0 | 0.00 | 0 | 0 | numeric | 248332 |

| out_prncp_inv | 255798 | 28.83 | 0 | 0.00 | 0 | 0 | numeric | 266244 |

| total_pymnt | 17759 | 2.00 | 0 | 0.00 | 0 | 0 | numeric | 506726 |

| total_pymnt_inv | 18037 | 2.03 | 0 | 0.00 | 0 | 0 | numeric | 506616 |

| total_rec_prncp | 18145 | 2.04 | 0 | 0.00 | 0 | 0 | numeric | 260227 |

| total_rec_int | 18214 | 2.05 | 0 | 0.00 | 0 | 0 | numeric | 324635 |

| total_rec_late_fee | 874862 | 98.59 | 0 | 0.00 | 0 | 0 | numeric | 6181 |

| recoveries | 862702 | 97.22 | 0 | 0.00 | 0 | 0 | numeric | 23055 |

| collection_recovery_fee | 863872 | 97.35 | 0 | 0.00 | 0 | 0 | numeric | 20708 |

| last_pymnt_d | 0 | 0.00 | 17659 | 1.99 | 0 | 0 | character | 98 |

| last_pymnt_amnt | 17673 | 1.99 | 0 | 0.00 | 0 | 0 | numeric | 232451 |

| next_pymnt_d | 0 | 0.00 | 252971 | 28.51 | 0 | 0 | character | 100 |

| last_credit_pull_d | 0 | 0.00 | 53 | 0.01 | 0 | 0 | character | 103 |

| collections_12_mths_ex_med | 875553 | 98.67 | 145 | 0.02 | 0 | 0 | numeric | 12 |

| mths_since_last_major_derog | 0 | 0.00 | 665676 | 75.02 | 0 | 0 | character | 168 |

| policy_code | 0 | 0.00 | 0 | 0.00 | 0 | 0 | numeric | 1 |

| application_type | 0 | 0.00 | 0 | 0.00 | 0 | 0 | character | 2 |

| annual_inc_joint | 0 | 0.00 | 886868 | 99.94 | 0 | 0 | character | 308 |

| dti_joint | 0 | 0.00 | 886870 | 99.94 | 0 | 0 | character | 449 |

| verification_status_joint | 0 | 0.00 | 886868 | 99.94 | 0 | 0 | character | 3 |

| acc_now_delinq | 883236 | 99.53 | 29 | 0.00 | 0 | 0 | numeric | 8 |

| tot_coll_amt | 0 | 0.00 | 70276 | 7.92 | 0 | 0 | character | 10325 |

| tot_cur_bal | 0 | 0.00 | 70276 | 7.92 | 0 | 0 | character | 327342 |

| open_acc_6m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 13 |

| open_il_6m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 35 |

| open_il_12m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 12 |

| open_il_24m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 17 |

| mths_since_rcnt_il | 0 | 0.00 | 866569 | 97.65 | 0 | 0 | character | 201 |

| total_bal_il | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 17030 |

| il_util | 0 | 0.00 | 868762 | 97.90 | 0 | 0 | character | 1272 |

| open_rv_12m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 18 |

| open_rv_24m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 28 |

| max_bal_bc | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 10707 |

| all_util | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 1128 |

| total_rev_hi_lim | 0 | 0.00 | 70276 | 7.92 | 0 | 0 | character | 21251 |

| inq_fi | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 18 |

| total_cu_tl | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 33 |

| inq_last_12m | 0 | 0.00 | 866007 | 97.59 | 0 | 0 | character | 29 |

It seems the data has two unique identifiers id and member_id. There is no variable with 100% missing or zero values i.e. information power of zero. There are a few which have a high ratio of NAs so the meta description needs to be checked whether this is expected. There are also variables which have only one unique value, e.g. policy_code. Again, the meta description should be checked to see the rationale but such dimensions are not useful for any analysis or model buidling.

Our meta table also contains the absolute number of unique values which is helpful for plotting (for attributes that is). Another interesting ratio to look at is that of unique values over all values for any attribute. A high ratio would indicate that this is probably a “free” field, i.e. no particular constraints are put on its values (except key variables of course but in case of uniqueness their ratio should be one as is the case with id and member_id). When looking into correlations, these high ratio fields will have low correlation with other fields but they may still be useful e.g. because they have “direction” information (e.g. the direction of an effect) or, in the case of strings, may be useful for text analytics. We thus add a respective variable to the meta data. For improved readability, we use the scales::percent() function to convert output to percent.

meta_loans <-

meta_loans %>%

mutate(uniq_rat = unique / nrow(loans))

meta_loans %>%

select(variable, unique, uniq_rat) %>%

mutate(unique = unique, uniq_rat = scales::percent(uniq_rat)) %>%

knitr::kable()| variable | unique | uniq_rat |

|---|---|---|

| id | 887379 | 100.0% |

| member_id | 887379 | 100.0% |

| loan_amnt | 1372 | 0.2% |

| funded_amnt | 1372 | 0.2% |

| funded_amnt_inv | 9856 | 1.1% |

| term | 2 | 0.0% |

| int_rate | 542 | 0.1% |

| installment | 68711 | 7.7% |

| grade | 7 | 0.0% |

| sub_grade | 35 | 0.0% |

| emp_title | 289148 | 32.6% |

| emp_length | 12 | 0.0% |

| home_ownership | 6 | 0.0% |

| annual_inc | 49384 | 5.6% |

| verification_status | 3 | 0.0% |

| issue_d | 103 | 0.0% |

| loan_status | 10 | 0.0% |

| pymnt_plan | 2 | 0.0% |

| url | 887379 | 100.0% |

| desc | 124453 | 14.0% |

| purpose | 14 | 0.0% |

| title | 61452 | 6.9% |

| zip_code | 935 | 0.1% |

| addr_state | 51 | 0.0% |

| dti | 4086 | 0.5% |

| delinq_2yrs | 29 | 0.0% |

| earliest_cr_line | 697 | 0.1% |

| inq_last_6mths | 28 | 0.0% |

| mths_since_last_delinq | 155 | 0.0% |

| mths_since_last_record | 123 | 0.0% |

| open_acc | 77 | 0.0% |

| pub_rec | 32 | 0.0% |

| revol_bal | 73740 | 8.3% |

| revol_util | 1356 | 0.2% |

| total_acc | 135 | 0.0% |

| initial_list_status | 2 | 0.0% |

| out_prncp | 248332 | 28.0% |

| out_prncp_inv | 266244 | 30.0% |

| total_pymnt | 506726 | 57.1% |

| total_pymnt_inv | 506616 | 57.1% |

| total_rec_prncp | 260227 | 29.3% |

| total_rec_int | 324635 | 36.6% |

| total_rec_late_fee | 6181 | 0.7% |

| recoveries | 23055 | 2.6% |

| collection_recovery_fee | 20708 | 2.3% |

| last_pymnt_d | 98 | 0.0% |

| last_pymnt_amnt | 232451 | 26.2% |

| next_pymnt_d | 100 | 0.0% |

| last_credit_pull_d | 103 | 0.0% |

| collections_12_mths_ex_med | 12 | 0.0% |

| mths_since_last_major_derog | 168 | 0.0% |

| policy_code | 1 | 0.0% |

| application_type | 2 | 0.0% |

| annual_inc_joint | 308 | 0.0% |

| dti_joint | 449 | 0.1% |

| verification_status_joint | 3 | 0.0% |

| acc_now_delinq | 8 | 0.0% |

| tot_coll_amt | 10325 | 1.2% |

| tot_cur_bal | 327342 | 36.9% |

| open_acc_6m | 13 | 0.0% |

| open_il_6m | 35 | 0.0% |

| open_il_12m | 12 | 0.0% |

| open_il_24m | 17 | 0.0% |

| mths_since_rcnt_il | 201 | 0.0% |

| total_bal_il | 17030 | 1.9% |

| il_util | 1272 | 0.1% |

| open_rv_12m | 18 | 0.0% |

| open_rv_24m | 28 | 0.0% |

| max_bal_bc | 10707 | 1.2% |

| all_util | 1128 | 0.1% |

| total_rev_hi_lim | 21251 | 2.4% |

| inq_fi | 18 | 0.0% |

| total_cu_tl | 33 | 0.0% |

| inq_last_12m | 29 | 0.0% |

An attribute like emp_title has over 30% unique values which makes it a poor candidate for modeling as it seems borrowers are free to describe their notion of employment title. A sophisticated model, however, may even take advantage of the data by checking the strings for “indicator words” that may be associated with an honest or a dishonest (credible / non-credible) candidate.

5.1 Data Transformation

We should also check the variable types. As mentioned in section Data Import the import function uses heuristics to guess the data types and these may not always work.

- annual_inc_joint = character but should be numeric

- dates are read as character and need to be transformed

First let’s transform character to numeric. We make use of dplyr::mutate_at() function and provide a vector of columns to be mutated (transformed). In general, when using libraries from the tidyverse (these libraries are mainly authored by Hadley Wickham and other RStudio people), most functions offer a standard version as opposed to an NSE (non-standard evaluation) version which can take character values as variable names. These functions usually have a trailing underscore, e.g. dplyr::select_() as compared to the non-standard evaluation function dplyr::select(). For details, see the dplyr vignette on Non-standard evaluation. However, note that the tidyverse libraries are still changing a fair amount so best to cross-check whether used function is the most efficient one for given task or if there is a new one introduced in the mean time.

As opposed to packages like data.table tidyverse packages do not alter their input in place so we have to re-assign the result to the original object. We could also create a new object but this is memory-inefficient (in fact even the re-assignment to the original object creates a temporary copy). For more details on memory management, see chapter Memory in Hadley Wickham’s book “Advanced R”.

chr_to_num_vars <-

c("annual_inc_joint", "mths_since_last_major_derog", "open_acc_6m",

"open_il_6m", "open_il_12m", "open_il_24m", "mths_since_rcnt_il",

"total_bal_il", "il_util", "open_rv_12m", "open_rv_24m",

"max_bal_bc", "all_util", "total_rev_hi_lim", "total_cu_tl",

"inq_last_12m", "dti_joint", "inq_fi", "tot_cur_bal", "tot_coll_amt")

loans <-

loans %>%

mutate_at(.funs = funs(as.numeric), .vars = chr_to_num_vars)Let’s have a look at the date variables to see how they need to be transformed.

chr_to_date_vars <-

c("issue_d", "last_pymnt_d", "last_credit_pull_d",

"next_pymnt_d", "earliest_cr_line", "next_pymnt_d")

loans %>%

select_(.dots = chr_to_date_vars) %>%

str()## Classes 'tbl_df', 'tbl' and 'data.frame': 887379 obs. of 5 variables:

## $ issue_d : chr "Dec-2011" "Dec-2011" "Dec-2011" "Dec-2011" ...

## $ last_pymnt_d : chr "Jan-2015" "Apr-2013" "Jun-2014" "Jan-2015" ...

## $ last_credit_pull_d: chr "Jan-2016" "Sep-2013" "Jan-2016" "Jan-2015" ...

## $ next_pymnt_d : chr NA NA NA NA ...

## $ earliest_cr_line : chr "Jan-1985" "Apr-1999" "Nov-2001" "Feb-1996" ...head(unique(loans$next_pymnt_d))## [1] NA "Feb-2016" "Jan-2016" "Sep-2013" "Feb-2014" "May-2014"for (i in chr_to_date_vars){

print(head(unique(loans[, i])))

}## # A tibble: 6 x 1

## issue_d

## <chr>

## 1 Dec-2011

## 2 Nov-2011

## 3 Oct-2011

## 4 Sep-2011

## 5 Aug-2011

## 6 Jul-2011

## # A tibble: 6 x 1

## last_pymnt_d

## <chr>

## 1 Jan-2015

## 2 Apr-2013

## 3 Jun-2014

## 4 Jan-2016

## 5 Apr-2012

## 6 Nov-2012

## # A tibble: 6 x 1

## last_credit_pull_d

## <chr>

## 1 Jan-2016

## 2 Sep-2013

## 3 Jan-2015

## 4 Sep-2015

## 5 Dec-2014

## 6 Aug-2012

## # A tibble: 6 x 1

## next_pymnt_d

## <chr>

## 1 <NA>

## 2 Feb-2016

## 3 Jan-2016

## 4 Sep-2013

## 5 Feb-2014

## 6 May-2014

## # A tibble: 6 x 1

## earliest_cr_line

## <chr>

## 1 Jan-1985

## 2 Apr-1999

## 3 Nov-2001

## 4 Feb-1996

## 5 Jan-1996

## 6 Nov-2004

## # A tibble: 6 x 1

## next_pymnt_d

## <chr>

## 1 <NA>

## 2 Feb-2016

## 3 Jan-2016

## 4 Sep-2013

## 5 Feb-2014

## 6 May-2014It seems the date format is consistent and follows month-year convention. We can use the base::as.Date() function to convert this to a date format. The function requires a day as well so we simply add the 1st of each month to the existing character string via pasting together strings using base::paste0(). Note that base::paste0("bla", NA) will not return NA but the concatenated string (here: blaNA). Conveniently, base::as.Date() will return NA so we can leave it at that. Alternatively, we could include an exception handler for NA values to explicitly handle those because we saw previously that some date variables include NA values. One way to approach that would be to wrap the date conversion function into a base::ifelse() call as so ifelse(is.na(x), NA, some_date_function(). However, it seems that base::ifelse() is dropping the date class that we just created with our date function, for details, see e.g. How to prevent ifelse from turning Date objects into numeric objects. As we do not want to deal with these issues at this moment, we simply go with the default behavior of base::as.Date() as it returns NA in cases of bad input anyway.

Let’s also return the NA values of the input to remind ourselves of the number. It should coincide with the number of NA after we have converted / transformed the date variables.

meta_loans %>%

select(variable, q_na) %>%

filter(variable %in% chr_to_date_vars)## variable q_na

## 1 issue_d 0

## 2 earliest_cr_line 29

## 3 last_pymnt_d 17659

## 4 next_pymnt_d 252971

## 5 last_credit_pull_d 53Finally, this is how our custom date conversion function will look like, we call it convert_date() and it will take the string value to be converted as input. We define the date format by following the function conventions of base::as.Date(), for details see ?base::as.Date. We could have also used an anonymous function directly in the dplyr::mutate_at() call but the creation of a specific function seemed appropriate as we may use it several times.

convert_date <- function(x){

as.Date(paste0("01-", x), format = "%d-%b-%Y")

}

loans <-

loans %>%

mutate_at(.funs = funs(convert_date), .vars = chr_to_date_vars)num_vars <-

loans %>%

sapply(is.numeric) %>%

which() %>%

names()

meta_loans %>%

select(variable, p_zeros, p_na, unique) %>%

filter_(~ variable %in% num_vars) %>%

knitr::kable()| variable | p_zeros | p_na | unique |

|---|---|---|---|

| id | 0.00 | 0.00 | 887379 |

| member_id | 0.00 | 0.00 | 887379 |

| loan_amnt | 0.00 | 0.00 | 1372 |

| funded_amnt | 0.00 | 0.00 | 1372 |

| funded_amnt_inv | 0.03 | 0.00 | 9856 |

| int_rate | 0.00 | 0.00 | 542 |

| installment | 0.00 | 0.00 | 68711 |

| annual_inc | 0.00 | 0.00 | 49384 |

| dti | 0.05 | 0.00 | 4086 |

| delinq_2yrs | 80.80 | 0.00 | 29 |

| inq_last_6mths | 56.11 | 0.00 | 28 |

| mths_since_last_delinq | 0.19 | 51.20 | 155 |

| mths_since_last_record | 0.14 | 84.56 | 123 |

| open_acc | 0.00 | 0.00 | 77 |

| pub_rec | 84.70 | 0.00 | 32 |

| revol_bal | 0.38 | 0.00 | 73740 |

| revol_util | 0.40 | 0.06 | 1356 |

| total_acc | 0.00 | 0.00 | 135 |

| out_prncp | 28.83 | 0.00 | 248332 |

| out_prncp_inv | 28.83 | 0.00 | 266244 |

| total_pymnt | 2.00 | 0.00 | 506726 |

| total_pymnt_inv | 2.03 | 0.00 | 506616 |

| total_rec_prncp | 2.04 | 0.00 | 260227 |

| total_rec_int | 2.05 | 0.00 | 324635 |

| total_rec_late_fee | 98.59 | 0.00 | 6181 |

| recoveries | 97.22 | 0.00 | 23055 |

| collection_recovery_fee | 97.35 | 0.00 | 20708 |

| last_pymnt_amnt | 1.99 | 0.00 | 232451 |

| collections_12_mths_ex_med | 98.67 | 0.02 | 12 |

| mths_since_last_major_derog | 0.00 | 75.02 | 168 |

| policy_code | 0.00 | 0.00 | 1 |

| annual_inc_joint | 0.00 | 99.94 | 308 |

| dti_joint | 0.00 | 99.94 | 449 |

| acc_now_delinq | 99.53 | 0.00 | 8 |

| tot_coll_amt | 0.00 | 7.92 | 10325 |

| tot_cur_bal | 0.00 | 7.92 | 327342 |

| open_acc_6m | 0.00 | 97.59 | 13 |

| open_il_6m | 0.00 | 97.59 | 35 |

| open_il_12m | 0.00 | 97.59 | 12 |

| open_il_24m | 0.00 | 97.59 | 17 |

| mths_since_rcnt_il | 0.00 | 97.65 | 201 |

| total_bal_il | 0.00 | 97.59 | 17030 |

| il_util | 0.00 | 97.90 | 1272 |

| open_rv_12m | 0.00 | 97.59 | 18 |

| open_rv_24m | 0.00 | 97.59 | 28 |

| max_bal_bc | 0.00 | 97.59 | 10707 |

| all_util | 0.00 | 97.59 | 1128 |

| total_rev_hi_lim | 0.00 | 7.92 | 21251 |

| inq_fi | 0.00 | 97.59 | 18 |

| total_cu_tl | 0.00 | 97.59 | 33 |

| inq_last_12m | 0.00 | 97.59 | 29 |

We also see that variables mths_since_last_delinq, mths_since_last_record, mths_since_last_major_derog, dti_joint and annual_inc_joint have a large share of NA values. If we think about this in more detail, it may be reasonable to assume that NA values for the variables mths_since_last_delinq, mths_since_last_record and mths_since_last_major_derog actually indicate that there was no event/record of any missed payment so there cannot be any time value. Analogously, a missing value for annual_inc_joint and dti_joint may simply indicate that it is a single borrower or the partner has no income. Thus, the first three variables actually carry valuable information that may be lost if we ignored it. We will thus replace the missing values with zeros to make them available for modeling. It should be noted though that a zero time could indicate an event that is just happening so we have to document our assumptions carefully.

na_to_zero_vars <-

c("mths_since_last_delinq", "mths_since_last_record",

"mths_since_last_major_derog")

loans <-

loans %>%

mutate_at(.vars = na_to_zero_vars, .funs = funs(replace(., is.na(.), 0)))These transformations should have us covered for now. We recreate the meta table after all these changes.

meta_loans <- funModeling::df_status(loans, print_results = FALSE)

meta_loans <-

meta_loans %>%

mutate(uniq_rat = unique / nrow(loans))5.2 Additional Meta Data File

Next we look at the meta descriptions provided in the additional Excel file.

knitr::kable(meta_loan_stats[,1:2])| LoanStatNew | Description |

|---|---|

| acc_now_delinq | The number of accounts on which the borrower is now delinquent. |

| acc_open_past_24mths | Number of trades opened in past 24 months. |

| addr_state | The state provided by the borrower in the loan application |

| all_util | Balance to credit limit on all trades |

| annual_inc | The self-reported annual income provided by the borrower during registration. |

| annual_inc_joint | The combined self-reported annual income provided by the co-borrowers during registration |

| application_type | Indicates whether the loan is an individual application or a joint application with two co-borrowers |

| avg_cur_bal | Average current balance of all accounts |

| bc_open_to_buy | Total open to buy on revolving bankcards. |

| bc_util | Ratio of total current balance to high credit/credit limit for all bankcard accounts. |

| chargeoff_within_12_mths | Number of charge-offs within 12 months |

| collection_recovery_fee | post charge off collection fee |

| collections_12_mths_ex_med | Number of collections in 12 months excluding medical collections |

| delinq_2yrs | The number of 30+ days past-due incidences of delinquency in the borrower’s credit file for the past 2 years |

| delinq_amnt | The past-due amount owed for the accounts on which the borrower is now delinquent. |

| desc | Loan description provided by the borrower |

| dti | A ratio calculated using the borrower’s total monthly debt payments on the total debt obligations, excluding mortgage and the requested LC loan, divided by the borrower’s self-reported monthly income. |

| dti_joint | A ratio calculated using the co-borrowers’ total monthly payments on the total debt obligations, excluding mortgages and the requested LC loan, divided by the co-borrowers’ combined self-reported monthly income |

| earliest_cr_line | The month the borrower’s earliest reported credit line was opened |

| emp_length | Employment length in years. Possible values are between 0 and 10 where 0 means less than one year and 10 means ten or more years. |

| emp_title | The job title supplied by the Borrower when applying for the loan.* |

| fico_range_high | The upper boundary range the borrower’s FICO at loan origination belongs to. |

| fico_range_low | The lower boundary range the borrower’s FICO at loan origination belongs to. |

| funded_amnt | The total amount committed to that loan at that point in time. |

| funded_amnt_inv | The total amount committed by investors for that loan at that point in time. |

| grade | LC assigned loan grade |

| home_ownership | The home ownership status provided by the borrower during registration or obtained from the credit report. Our values are: RENT, OWN, MORTGAGE, OTHER |

| id | A unique LC assigned ID for the loan listing. |

| il_util | Ratio of total current balance to high credit/credit limit on all install acct |

| initial_list_status | The initial listing status of the loan. Possible values are – W, F |

| inq_fi | Number of personal finance inquiries |

| inq_last_12m | Number of credit inquiries in past 12 months |

| inq_last_6mths | The number of inquiries in past 6 months (excluding auto and mortgage inquiries) |

| installment | The monthly payment owed by the borrower if the loan originates. |

| int_rate | Interest Rate on the loan |

| issue_d | The month which the loan was funded |

| last_credit_pull_d | The most recent month LC pulled credit for this loan |

| last_fico_range_high | The upper boundary range the borrower’s last FICO pulled belongs to. |

| last_fico_range_low | The lower boundary range the borrower’s last FICO pulled belongs to. |

| last_pymnt_amnt | Last total payment amount received |

| last_pymnt_d | Last month payment was received |

| loan_amnt | The listed amount of the loan applied for by the borrower. If at some point in time, the credit department reduces the loan amount, then it will be reflected in this value. |

| loan_status | Current status of the loan |

| max_bal_bc | Maximum current balance owed on all revolving accounts |

| member_id | A unique LC assigned Id for the borrower member. |

| mo_sin_old_il_acct | Months since oldest bank installment account opened |

| mo_sin_old_rev_tl_op | Months since oldest revolving account opened |

| mo_sin_rcnt_rev_tl_op | Months since most recent revolving account opened |

| mo_sin_rcnt_tl | Months since most recent account opened |

| mort_acc | Number of mortgage accounts. |

| mths_since_last_delinq | The number of months since the borrower’s last delinquency. |

| mths_since_last_major_derog | Months since most recent 90-day or worse rating |

| mths_since_last_record | The number of months since the last public record. |

| mths_since_rcnt_il | Months since most recent installment accounts opened |

| mths_since_recent_bc | Months since most recent bankcard account opened. |

| mths_since_recent_bc_dlq | Months since most recent bankcard delinquency |

| mths_since_recent_inq | Months since most recent inquiry. |

| mths_since_recent_revol_delinq | Months since most recent revolving delinquency. |

| next_pymnt_d | Next scheduled payment date |

| num_accts_ever_120_pd | Number of accounts ever 120 or more days past due |

| num_actv_bc_tl | Number of currently active bankcard accounts |

| num_actv_rev_tl | Number of currently active revolving trades |

| num_bc_sats | Number of satisfactory bankcard accounts |

| num_bc_tl | Number of bankcard accounts |

| num_il_tl | Number of installment accounts |

| num_op_rev_tl | Number of open revolving accounts |

| num_rev_accts | Number of revolving accounts |

| num_rev_tl_bal_gt_0 | Number of revolving trades with balance >0 |

| num_sats | Number of satisfactory accounts |

| num_tl_120dpd_2m | Number of accounts currently 120 days past due (updated in past 2 months) |

| num_tl_30dpd | Number of accounts currently 30 days past due (updated in past 2 months) |

| num_tl_90g_dpd_24m | Number of accounts 90 or more days past due in last 24 months |

| num_tl_op_past_12m | Number of accounts opened in past 12 months |

| open_acc | The number of open credit lines in the borrower’s credit file. |

| open_acc_6m | Number of open trades in last 6 months |

| open_il_12m | Number of installment accounts opened in past 12 months |

| open_il_24m | Number of installment accounts opened in past 24 months |

| open_il_6m | Number of currently active installment trades |

| open_rv_12m | Number of revolving trades opened in past 12 months |

| open_rv_24m | Number of revolving trades opened in past 24 months |

| out_prncp | Remaining outstanding principal for total amount funded |

| out_prncp_inv | Remaining outstanding principal for portion of total amount funded by investors |

| pct_tl_nvr_dlq | Percent of trades never delinquent |

| percent_bc_gt_75 | Percentage of all bankcard accounts > 75% of limit. |

| policy_code | publicly available policy_code=1 |

| new products not publicly availab | le policy_code=2 |

| pub_rec | Number of derogatory public records |

| pub_rec_bankruptcies | Number of public record bankruptcies |

| purpose | A category provided by the borrower for the loan request. |

| pymnt_plan | Indicates if a payment plan has been put in place for the loan |

| recoveries | post charge off gross recovery |

| revol_bal | Total credit revolving balance |

| revol_util | Revolving line utilization rate, or the amount of credit the borrower is using relative to all available revolving credit. |

| sub_grade | LC assigned loan subgrade |

| tax_liens | Number of tax liens |

| term | The number of payments on the loan. Values are in months and can be either 36 or 60. |

| title | The loan title provided by the borrower |

| tot_coll_amt | Total collection amounts ever owed |

| tot_cur_bal | Total current balance of all accounts |

| tot_hi_cred_lim | Total high credit/credit limit |

| total_acc | The total number of credit lines currently in the borrower’s credit file |

| total_bal_ex_mort | Total credit balance excluding mortgage |

| total_bal_il | Total current balance of all installment accounts |

| total_bc_limit | Total bankcard high credit/credit limit |

| total_cu_tl | Number of finance trades |

| total_il_high_credit_limit | Total installment high credit/credit limit |

| total_pymnt | Payments received to date for total amount funded |

| total_pymnt_inv | Payments received to date for portion of total amount funded by investors |

| total_rec_int | Interest received to date |

| total_rec_late_fee | Late fees received to date |

| total_rec_prncp | Principal received to date |

| total_rev_hi_lim | Total revolving high credit/credit limit |

| url | URL for the LC page with listing data. |

| verification_status | Indicates if income was verified by LC, not verified, or if the income source was verified |

| verified_status_joint | Indicates if the co-borrowers’ joint income was verified by LC, not verified, or if the income source was verified |

| zip_code | The first 3 numbers of the zip code provided by the borrower in the loan application. |

| NA | NA |

| NA | * Employer Title replaces Employer Name for all loans listed after 9/23/2013 |

As expected, id is “A unique LC assigned ID for the loan listing.” and member_id is “A unique LC assigned Id for the borrower member.”. The policy_code is “publicly available policy_code=1 new products not publicly available policy_code=2”. That means there could be different values but as we have seen before in this data it only takes on one value.

Finally, let’s look at variables that are either in the data and not in the meta description or vice versa. The dplyr::setdiff() function does what its name suggests, just pay attention to the order of arguments to understand which difference you actually get.

Variables in loans data but not in meta description.

dplyr::setdiff(colnames(loans), meta_loan_stats$LoanStatNew)## [1] "verification_status_joint" "total_rev_hi_lim"Variables in meta description but not in loans data.

dplyr::setdiff(meta_loan_stats$LoanStatNew, colnames(loans))## [1] "acc_open_past_24mths" "avg_cur_bal"

## [3] "bc_open_to_buy" "bc_util"

## [5] "chargeoff_within_12_mths" "delinq_amnt"

## [7] "fico_range_high" "fico_range_low"

## [9] "last_fico_range_high" "last_fico_range_low"

## [11] "mo_sin_old_il_acct" "mo_sin_old_rev_tl_op"

## [13] "mo_sin_rcnt_rev_tl_op" "mo_sin_rcnt_tl"

## [15] "mort_acc" "mths_since_recent_bc"

## [17] "mths_since_recent_bc_dlq" "mths_since_recent_inq"

## [19] "mths_since_recent_revol_delinq" "num_accts_ever_120_pd"

## [21] "num_actv_bc_tl" "num_actv_rev_tl"

## [23] "num_bc_sats" "num_bc_tl"

## [25] "num_il_tl" "num_op_rev_tl"

## [27] "num_rev_accts" "num_rev_tl_bal_gt_0"

## [29] "num_sats" "num_tl_120dpd_2m"

## [31] "num_tl_30dpd" "num_tl_90g_dpd_24m"

## [33] "num_tl_op_past_12m" "pct_tl_nvr_dlq"

## [35] "percent_bc_gt_75" "pub_rec_bankruptcies"

## [37] "tax_liens" "tot_hi_cred_lim"

## [39] "total_bal_ex_mort" "total_bc_limit"

## [41] "total_il_high_credit_limit" "total_rev_hi_lim "

## [43] "verified_status_joint" NAIt seems for the loans variables verification_status_joint and total_rev_hi_lim there are actually equivalents in the meta data but they carry slightly different names or have leading / trailing blanks. There are quite a few variables in the meta description that are not in the data. But that should be less of a concern as we don’t need those anyway.

6 Defining default

Our ultimate goal is the prediction of loan defaults from a given set of observations by selecting explanatory (independent) variables (also called feature in machine learning parlance) that result in an acceptable model performance as quantified by a pre-defined measure. This goal will also impact our exploratory data analysis. We will try to build some intuition about the data given our knowledge of the final goal. If we were given a data discovery task such as detecting interesting patterns via unsupervised learning methods we would probably perform a very different analysis.

For loans data the usual variable of interest is a delay or a default on required payments. So far we haven’t looked at any default variable because, in fact, there is no one variable indicating it. One may speculate about the reasons but a plausible explanation may be that the definiton of default depends on the perspective. A risk-averse person may classify any delay in scheduled payments immediately as default from day one while others may apply a step-wise approach considering that borrowers may pay at a later stage. Yet another classification may look at any rating deterioration and include a default as last grade in a rating scale.

Let’s look at potential variables that may indicate a default / delay in payments:

- loan_status: Current status of the loan

- delinq_2yrs: The number of 30+ days past-due incidences of delinquency in the borrower’s credit file for the past 2 years

- mths_since_last_delinq: The number of months since the borrower’s last delinquency.

We can look at the unique values of above variables by applying base::unique() via the purrr::map() function to each column of interest. The purrr library is part of the tidyverse set of libraries and applies functional paradigms via a level of abstraction.

default_vars <- c("loan_status", "delinq_2yrs", "mths_since_last_delinq")

purrr::map(.x = loans[, default_vars], .f = base::unique)## $loan_status

## [1] "Fully Paid"

## [2] "Charged Off"

## [3] "Current"

## [4] "Default"

## [5] "Late (31-120 days)"

## [6] "In Grace Period"

## [7] "Late (16-30 days)"

## [8] "Does not meet the credit policy. Status:Fully Paid"

## [9] "Does not meet the credit policy. Status:Charged Off"

## [10] "Issued"

##

## $delinq_2yrs

## [1] 0 2 3 1 4 6 5 8 7 9 11 NA 13 15 10 12 17 18 29 24 14 21 22

## [24] 19 16 30 26 20 27 39

##

## $mths_since_last_delinq

## [1] 0 35 38 61 8 20 18 68 45 48 41 40 74 25 53 39 10

## [18] 26 56 77 28 52 24 16 60 54 23 9 11 13 65 19 80 22

## [35] 59 79 44 64 57 14 63 49 15 73 70 29 51 5 75 55 2

## [52] 30 47 33 69 4 43 21 27 46 81 78 82 31 76 62 72 42

## [69] 50 3 12 67 36 34 58 17 71 66 32 6 37 7 1 83 86

## [86] 115 96 103 120 106 89 107 85 97 95 110 84 135 88 87 122 91

## [103] 146 134 114 99 93 127 101 94 102 129 113 139 131 156 143 109 119

## [120] 149 118 130 148 126 90 141 116 100 152 98 92 108 133 104 111 105

## [137] 170 124 136 180 188 140 151 159 121 123 157 112 154 171 142 125 117

## [154] 176 137We can see that delinq_2yrs shows only a few unique values which is a bit surprising as it could take many more values given its definition. The variable mths_since_last_delinq has some surprisingly large values. Both variables only indicate a delinquency in the past so they cannot help with the default definition.

The variable loan status seems to be an indicator of the current state a particular loan is in. We should also perform a count of the different values.

loans %>%

group_by(loan_status) %>%

summarize(count = n(), rel_count = count/nrow(loans)) %>%

knitr::kable()| loan_status | count | rel_count |

|---|---|---|

| Charged Off | 45248 | 0.0509906 |

| Current | 601779 | 0.6781533 |

| Default | 1219 | 0.0013737 |

| Does not meet the credit policy. Status:Charged Off | 761 | 0.0008576 |

| Does not meet the credit policy. Status:Fully Paid | 1988 | 0.0022403 |

| Fully Paid | 207723 | 0.2340860 |

| In Grace Period | 6253 | 0.0070466 |

| Issued | 8460 | 0.0095337 |

| Late (16-30 days) | 2357 | 0.0026561 |

| Late (31-120 days) | 11591 | 0.0130621 |

It is not immediately obvious what the different values stand for, so we refer to Lending Club’s documentation about “What do the different Note statuses mean?”

- Fully Paid: Loan has been fully repaid, either at the expiration of the 3- or 5-year year term or as a result of a prepayment.

- Current: Loan is up to date on all outstanding payments.

- Does not meet the credit policy. Status:Fully Paid: No explanation but see “fully paid”.

- Issued: New loan that has passed all Lending Club reviews, received full funding, and has been issued.

- Charged Off: Loan for which there is no longer a reasonable expectation of further payments. Generally, Charge Off occurs no later than 30 days after the Default status is reached. Upon Charge Off, the remaining principal balance of the Note is deducted from the account balance. Learn more about the difference between “default” and “charge off”.

- Does not meet the credit policy. Status:Charged Off: No explanation but see “Charged Off”

- Late (31-120 days): Loan has not been current for 31 to 120 days.

- In Grace Period: Loan is past due but within the 15-day grace period.

- Late (16-30 days): Loan has not been current for 16 to 30 days.

- Default: Loan has not been current for 121 days or more.

Given above information, we will define a default as follows.

defaulted <-

c("Default",

"Does not meet the credit policy. Status:Charged Off",

"In Grace Period",

"Late (16-30 days)",

"Late (31-120 days)")We now have to add an indicator variable to the data. We do this by reassigning the mutated data to the original object. An alternative would be to update the object via a compound assignment pipe-operator from the magrittr package magrittr::%<>% or an assignment in place := from the data.table package. We use a Boolean (True/False) indicator variable which will have nicer plotting properties (as it is treated like a character variable by the plotting library ggplot2) rather than a numerical value such as 1/0. R is usually clever enough to still allow calculations on Boolean values.

loans <-

loans %>%

mutate(default = ifelse(!(loan_status %in% defaulted), FALSE, TRUE))Given our new indicator variable, we can now compute the frequency of actual defaults in the training set. It is around 0.0249961.

loans %>%

summarise(default_freq = sum(default / n()))## # A tibble: 1 x 1

## default_freq

## <dbl>

## 1 0.02499608# alternatively in a table

table(loans$default) / nrow(loans)##

## FALSE TRUE

## 0.97500392 0.024996087 Removing variables deemed unfit for most modeling

As stated before some variables may actually have information value but are kicked out as we deem them unfit for most practical purposes. Arguably one would have to look at the actual value distribution as e.g. a high number of unique values may be non-sense values for only a few loans but we don’t dig deeper here.

We can get rid of following variables with given reason

- annual_inc_joint: high NA ratio

- dti_joint: high NA ratio

- verification_status_joint: high NA ratio

- policy_code: only one unique value -> standard deviation = 0

- id: key variable

- member_id: key variable

- emp_title: high amount of unique values

- url: high amount of unique values

- desc: high NA ratio

- title: high amount of unique values

- next_pymnt_d: high NA ratio

- open_acc_6m: high NA ratio

- open_il_6m: high NA ratio

- open_il_12m: high NA ratio

- open_il_24m: high NA ratio

- mths_since_rcnt_il: high NA ratio

- total_bal_il: high NA ratio

- il_util: high NA ratio

- open_rv_12m: high NA ratio

- open_rv_24m: high NA ratio

- max_bal_bc: high NA ratio

- all_util: high NA ratio

- total_rev_hi_lim: high NA ratio

- inq_fi: high NA ratio

- total_cu_tl: high NA ratio

- inq_last_12m: high NA ratio

vars_to_remove <-

c("annual_inc_joint", "dti_joint", "policy_code", "id", "member_id",

"emp_title", "url", "desc", "title", "open_acc_6m", "open_il_6m",

"open_il_12m", "open_il_24m", "mths_since_rcnt_il", "total_bal_il",

"il_util", "open_rv_12m", "open_rv_24m", "max_bal_bc", "all_util",

"total_rev_hi_lim", "inq_fi", "total_cu_tl", "inq_last_12m",

"verification_status_joint", "next_pymnt_d")

loans <- loans %>% select(-one_of(vars_to_remove))We further remove variables for different (stated) reasons

- sub_grade: contains same (but more granular) information as grade

- loan_status: has been used to define target variable

vars_to_remove <-

c("sub_grade", "loan_status")

loans <- loans %>% select(-one_of(vars_to_remove))8 A note on hypothesis generation vs. hypothesis confirmation

Before we start our analysis, we should be clear about its aim and what the data is used for. In the book “R for Data Science” the authors put it quite nicely under the name Hypothesis generation vs. hypothesis confirmation:

- Each observation can either be used for exploration or confirmation, not both.

- You can use an observation as many times as you like for exploration, but you can only use it once for confirmation. As soon as you use an observation twice, you’ve switched from confirmation to exploration. This is necessary because to confirm a hypothesis you must use data independent of the data that you used to generate the hypothesis. Otherwise you will be over optimistic. There is absolutely nothing wrong with exploration, but you should never sell an exploratory analysis as a confirmatory analysis because it is fundamentally misleading.

In a strict sense, this requires us to split the data into different sets. The authors go on suggesting a split:

- 60% of your data goes into a training (or exploration) set. You’re allowed to do anything you like with this data: visualise it and fit tons of models to it.

- 20% goes into a query set. You can use this data to compare models or visualisations by hand, but you’re not allowed to use it as part of an automated process.

- 20% is held back for a test set. You can only use this data ONCE, to test your final model.

This means that even for exploratory data analysis (EDA), we would only look at parts of the data. We will split the data into two sets with 80% train and 20% test ratio at random. As we are dealing with time-series data, we could also split the data by time. But time itself may be an explanatory variable which could be modeled. All exploratory analysis will be performed on the training data only. We will use base::set.seed() to make the random split reproducible. You can have an argument whether it is sensible to even use split data for EDA but EDA usually builds your intuition about the data and thus will shape data transformation and model decisions. The test data allows for testing all these assumptions in addition to the actual model performance. There are other methods such as cross validation which do not necessarily require a test data set but we have enough observations to afford one.

One note of caution is necessary here. Since not all data is used for model fitting, the test data may have labels that do not occur in the training set and with same rationale feautures may have unseen values. In addition, the data is imbalanced, i.e. only a few lenders default while many more do not. The last fact may actually require a non-random split considering the class label (default / non-default). The same may hold true for the features (independent variables). For more details on dealing with imbalanced data, see Learning from Imbalanced Classes or Learning from Imbalanced Data. Tom Fawcett puts it nicely in previous first mentioned blog post:

Conventional algorithms are often biased towards the majority class because their loss functions attempt to optimize quantities such as error rate, not taking the data distribution into consideration. In the worst case, minority examples are treated as outliers of the majority class and ignored. The learning algorithm simply generates a trivial classifier that classifies every example as the majority class.

We can do the split manually (see commented out code) but in order to ensure class distributions within the split data, we use function createDataPartition() from the caret package which performs stratified sampling. For details on the function, see The caret package: 4.1 Simple Splitting Based on the Outcome.

# ## manual approach

# # 80% of the sample size

# smp_size <- floor(0.8 * nrow(loans))

#

# # set the seed to make your partition reproductible

# set.seed(123)

# train_index <- sample(seq_len(nrow(loans)), size = smp_size)

#

# train <- loans[train_index, ]

# test <- loans[-train_index, ]

## with caret

set.seed(6438)

train_index <-

caret::createDataPartition(y = loans$default, times = 1,

p = .8, list = FALSE)

train <- loans[train_index, ]

test <- loans[-train_index, ]9 Exploratory Data Analysis

There are many excellent resources on exploratory analysis, e.g.

A visual look at the data should always precede any model considerations. A useful visualization library is ggplot2 (which is part of the tidyverse and further on also referred to as ggplot) that requires a few other libraries on top for extensions such as scales. We are not after publication-ready visualizations yet as this phase is considered data exploration or data discovery rather than results reporting. Nontheless, used visualization libraries already produce visually appealing graphics as they use smart heuristics and default values to guess sensible parameter settings.

library(scales)The most important questions around visualization are which variables are numeric and if so are they continous or discrete and which are strings. Furthermore, which variables are attributes (categorical) and which make up sensible metric-attribute pairs. An important information for efficient visualization with categorical variables is also the amount of unique values they can take and the ratio of zero or missing values, both which were already analyzed in section Meta Data. In a first exploratory analysis we aim for categorical variables that have high information power and not too many unique values to keep the information density at a manageable level. Also consider group sizes and differences between median and mean driven by outliers. Especially when drawing conclusions from summarized / aggregated information, we should be aware of group size. We thus may add this info directly in the plot or look at it before plotting in a grouped table.

A generally good idea is to look at the distributions of relevant continous variables. These are probably

- loan_amnt

- funded_amnt

- funded_amnt_inv

- annual_inc

Among the target variable default some interesting categorical variables might be

- term

- int_rate

- grade

- emp_title

- home_ownership

- verification_status

- issue_d (timestamp)

- loan_status

- purpose

- zip_code (geo-info)

- addr_state (geo-info)

- application_type

Assign continous variables to a character vector and reshape data for plotting of distributions.

income_vars <- c("annual_inc")

loan_amount_vars <- c("loan_amnt", "funded_amnt", "funded_amnt_inv")We can reshape and plot the original data for specified variables in a tidyr-dplyr-ggplot pipe. For details on tidying with tidyr, see Data Science with R - Data Tidying or Data Processing with dplyr & tidyr.

train %>%

select_(.dots = income_vars) %>%

gather_("variable", "value", gather_cols = income_vars) %>%

ggplot(aes(x = value)) +

facet_wrap(~ variable, scales = "free_x", ncol = 3) +

geom_histogram()## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.## Warning: Removed 4 rows containing non-finite values (stat_bin).

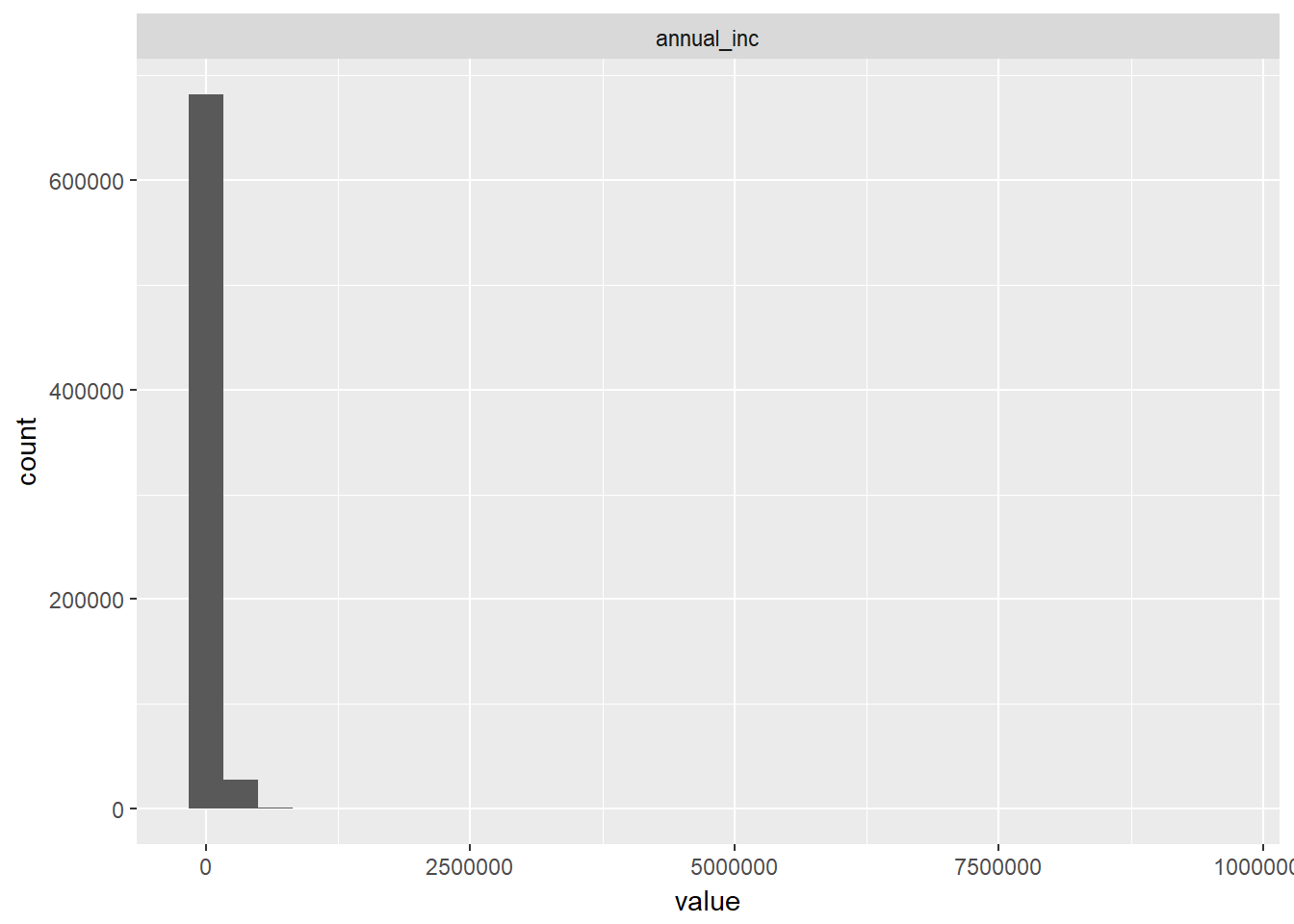

We can see that a lot of loans have corresponding annual income of zero and in general income seems low. As already known, joint income has a large number of NA values (i.e. cannot be displayed) and those few values that are present do not seem to have significant exposure. Most loan applications must have been submitted by single income borrowers, i.e. either single persons or single-income households.

train %>%

select_(.dots = loan_amount_vars) %>%

gather_("variable", "value", gather_cols = loan_amount_vars) %>%

ggplot(aes(x = value)) +

facet_wrap(~ variable, scales = "free_x", ncol = 3) +

geom_histogram()## `stat_bin()` using `bins = 30`. Pick better value with `binwidth`.

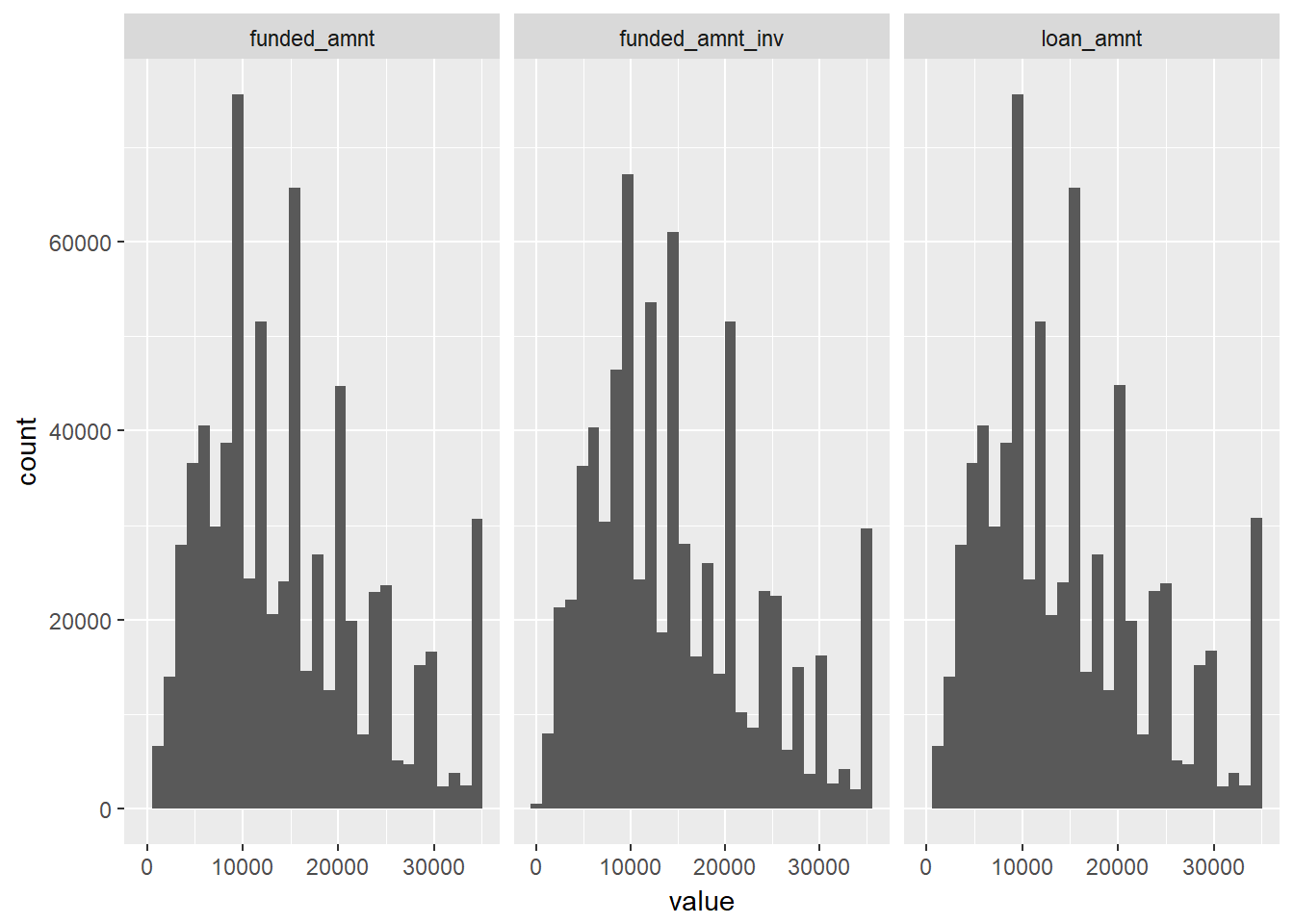

The loan amount distributions seems similar in shape suggesting not too much divergence between the loan amount applied for, the amount committed and the amount invested.

We had already identified a number of interesting categorical variables. Let’s combine our selection with the meta information gathered in an earlier stage to see the information power and uniqueness.

categorical_vars <-

c("term", "grade", "sub_grade", "emp_title", "home_ownership",

"verification_status", "loan_status", "purpose", "zip_code",

"addr_state", "application_type", "policy_code")

meta_loans %>%

select(variable, p_zeros, p_na, type, unique) %>%

filter_(~ variable %in% categorical_vars) %>%

knitr::kable()| variable | p_zeros | p_na | type | unique |

|---|---|---|---|---|

| term | 0 | 0.0 | character | 2 |

| grade | 0 | 0.0 | character | 7 |

| sub_grade | 0 | 0.0 | character | 35 |

| emp_title | 0 | 5.8 | character | 289148 |

| home_ownership | 0 | 0.0 | character | 6 |

| verification_status | 0 | 0.0 | character | 3 |

| loan_status | 0 | 0.0 | character | 10 |

| purpose | 0 | 0.0 | character | 14 |

| zip_code | 0 | 0.0 | character | 935 |

| addr_state | 0 | 0.0 | character | 51 |

| policy_code | 0 | 0.0 | numeric | 1 |

| application_type | 0 | 0.0 | character | 2 |

This gives us some ideas on what may be useful for a broad data exploration. Variables such as emp_title have too many unique values to be suitable for a classical categorical graph. Other variables may lend themselves to pairwise or correlation graphs such as int_rate while again others may be used in time series plots such as issue_d. We may even be able to plot some appealing geographical plots with geolocation variables such as zip_code or addr_state.

9.1 Grade

For a start, let’s look at grade which seems to be a rating classification scheme that Lending Club uses to assign loans into risk buckets similar to other popular rating schemes like S&P or Moodys. For more details, see Lending Club Rate Information. For now, it suffices to know that grades take values A, B, C, D, E, F, G where A represents the highest quality loan and G the lowest.

As a first relation, we investigate the distribution of loan amount over the different grades with a standard boxplot highlighting potential outliers in red. One may also select a pre-defined theme from the ggthemes package by adding a call to ggplot such as theme_economist() or even create a theme oneself. For details on themes, see ggplot2 themes and Introduction to ggthemes.

As mentioned before we want to be group size aware so let’s write a short function to be used inside the ggplot::geom_boxplot() call in combination with ggplot::stat_summary() where we can call user-defined functions using parameter fun.data. As it is often the case with plots, we need to experiment with the position of additional text elements which we achieve by scaling the y-position with some constant multiplier around the median (as the boxplot will have the median as horizontal bar within the rectangles). The mean can be added in a similar fashion, however we don’t need to specify the y-position explicitly. The count as text and the mean as point may overlap themselves and with the median horizontal bar. This is acceptable in an exploratory setting. For publication-ready plots one would have to perform some adjustments.

# see http://stackoverflow.com/questions/15660829/how-to-add-a-number-of-observations-per-group-and-use-group-mean-in-ggplot2-boxp

give_count <-

stat_summary(fun.data = function(x) return(c(y = median(x)*1.06,

label = length(x))),

geom = "text")

# see http://stackoverflow.com/questions/19876505/boxplot-show-the-value-of-mean

give_mean <-

stat_summary(fun.y = mean, colour = "darkgreen", geom = "point",

shape = 18, size = 3, show.legend = FALSE)train %>%

ggplot(aes(grade, loan_amnt)) +

geom_boxplot(fill = "white", colour = "darkblue",

outlier.colour = "red", outlier.shape = 1) +

give_count +

give_mean +

scale_y_continuous(labels = comma) +

facet_wrap(~ default) +

labs(title="Loan Amount by Grade", x = "Grade", y = "Loan Amount \n")

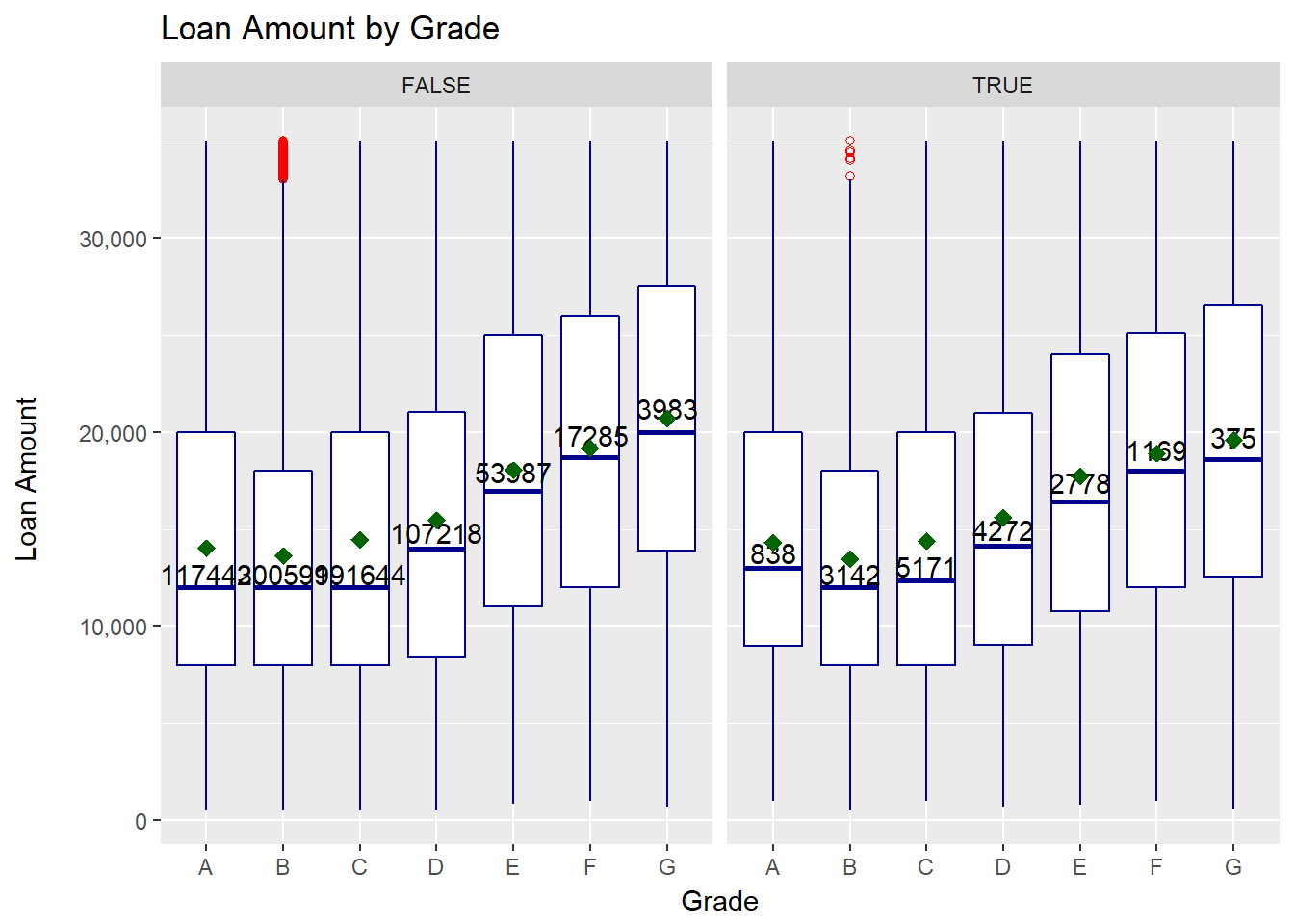

We can derive a few points from the plot:

- there is not a lot of difference between default and non-default

- lower quality loans tend to have a higher loan amount

- there are virtually no outliers except for grade B

- the loan amount spread (IQR) seems to be slightly higher for lower quality loans

According to Lending Club’s rate information, we would expect a strong increasing relationship between grade and interest rate so we can test this. We also consider the term of the loan as one might expect that longer term loans could have a higher interest rate.

train %>%

ggplot(aes(grade, int_rate)) +

geom_boxplot(fill = "white", colour = "darkblue",

outlier.colour = "red", outlier.shape = 1) +

give_count +

give_mean +

scale_y_continuous(labels = comma) +

labs(title="Interest Rate by Grade", x = "Grade", y = "Interest Rate \n") +

facet_wrap(~ term)

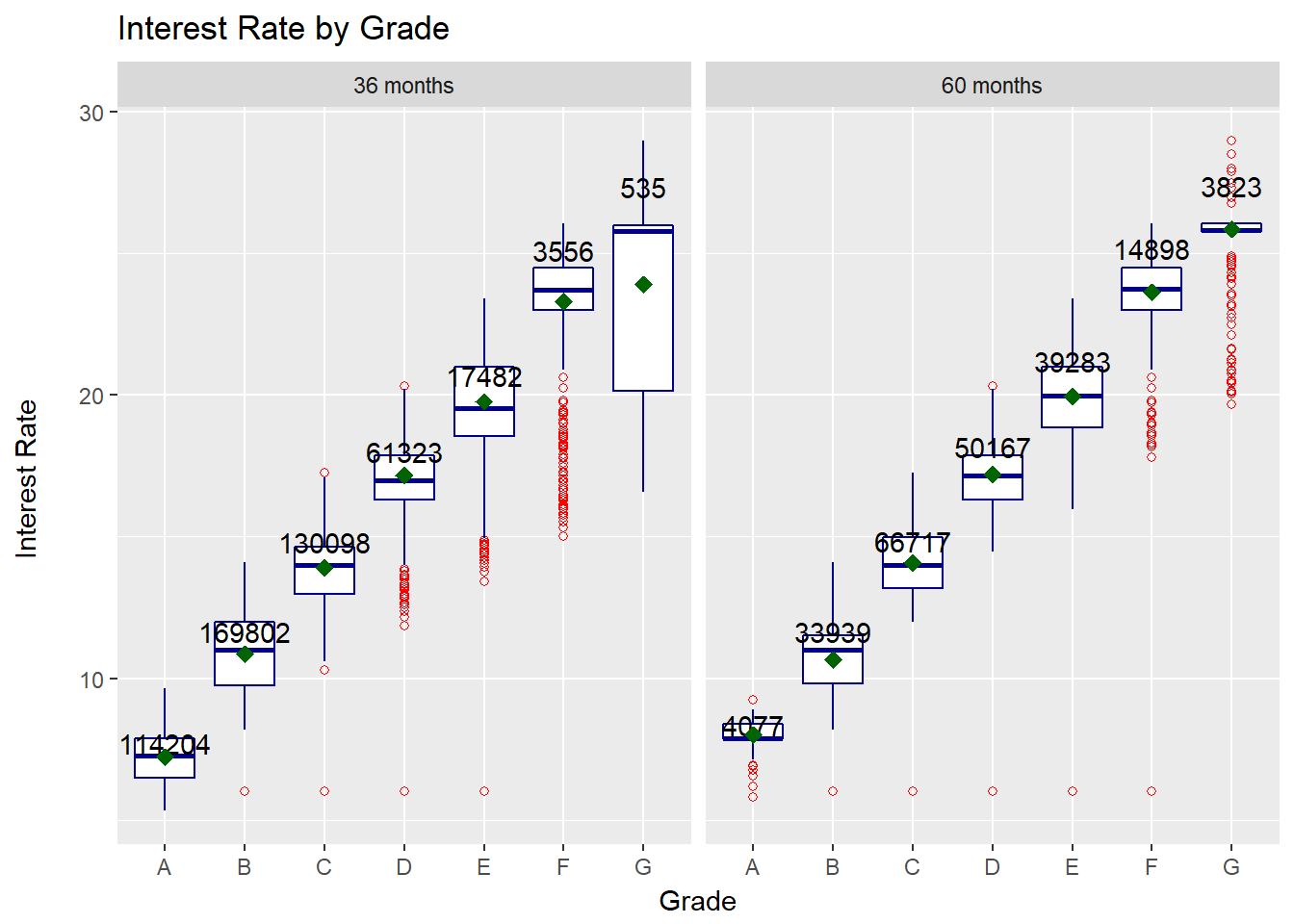

We can derive a few points from the plot:

- interest rate increases with grade worsening

- a few loans seem to have an equally low interest rate independent of grade

- the spread of rates seems to increase with grade worsening

- there tend to be more outliers on the lower end of the rate

- The 3-year term has a much higher number of high-rated borrowers while the 5-year term has a larger number in the low-rating grades

9.2 Home Ownership

We can do the same for home ownership.

train %>%

ggplot(aes(home_ownership, int_rate)) +

geom_boxplot(fill = "white", colour = "darkblue",

outlier.colour = "red", outlier.shape = 1) +

give_count +

give_mean +

scale_y_continuous(labels = comma) +

facet_wrap(~ default) +

labs(title="Interest Rate by Home Ownership", x = "Home Ownership", y = "Interest Rate \n")

We can derive a few points from the plot:

- there seems no immediate conclusion with respect to the impact of home ownership, e.g. we can see that median/mean interest rate is higher for people who own a house than for those who still pay a mortgage.

- interest rates are highest for values “any” and “none” which could be loans of limited data quality but there are very few data points.

- interest rate is higher for default loans, which is probably driven by other factors (e.g. grade)

9.3 Loan Amount and Income

Another interesting plot may be the relationship between loan amount and funded / invested amount. As all variables are continous, we can do that with a simple scatterplot but we will need to reshape the data to have both loan values plotted against loan amount.

In fact the reshaping here may be slightly odd as we like to display the same variable on the x-axis but different values on the y-axis facetted by their variable names. To achieve that, we gather the data three times with the same x variable but changing y variables.

funded_amnt <-

train %>%

transmute(loan_amnt = loan_amnt, value = funded_amnt,

variable = "funded_amnt")

funded_amnt_inv <-

train %>%

transmute(loan_amnt = loan_amnt, value = funded_amnt_inv,

variable = "funded_amnt_inv")

plot_data <- rbind(funded_amnt, funded_amnt_inv)

# remove unnecessary data using regex

ls()## [1] "categorical_vars" "chr_to_date_vars" "chr_to_num_vars"

## [4] "convert_date" "default_vars" "defaulted"

## [7] "excel_file" "funded_amnt" "funded_amnt_inv"

## [10] "give_count" "give_mean" "i"

## [13] "income_vars" "libraries_missing" "libraries_used"

## [16] "loan_amount_vars" "loans" "meta_browse_notes"

## [19] "meta_loan_stats" "meta_loans" "meta_reject_stats"

## [22] "na_to_zero_vars" "num_vars" "path"

## [25] "plot_data" "test" "train"

## [28] "train_index" "vars_to_remove"rm(list = ls()[grep("^funded", ls())])Now let’s plot the data.

plot_data %>%

ggplot(aes(x = loan_amnt, y = value)) +

facet_wrap(~ variable, scales = "free_x", ncol = 3) +

geom_point()

We can derive a few points from the plot:

- there are instances when funded amount is smaller loan amount

- there seems to be a number of loans where investment is smaller funded amount i.e. not the full loan is invested in

Let’s do the same but only for annual income versus loan amount.

train %>%

ggplot(aes(x = annual_inc, y = loan_amnt)) +

geom_point()## Warning: Removed 4 rows containing missing values (geom_point).

We can derive a few points from the plot:

- there is no immediatly discernible relationship

- there are quite a few income outliers with questionable values (e.g. why would a person with annual income 9500000 request a loan amount of 24000)

9.4 Time Series

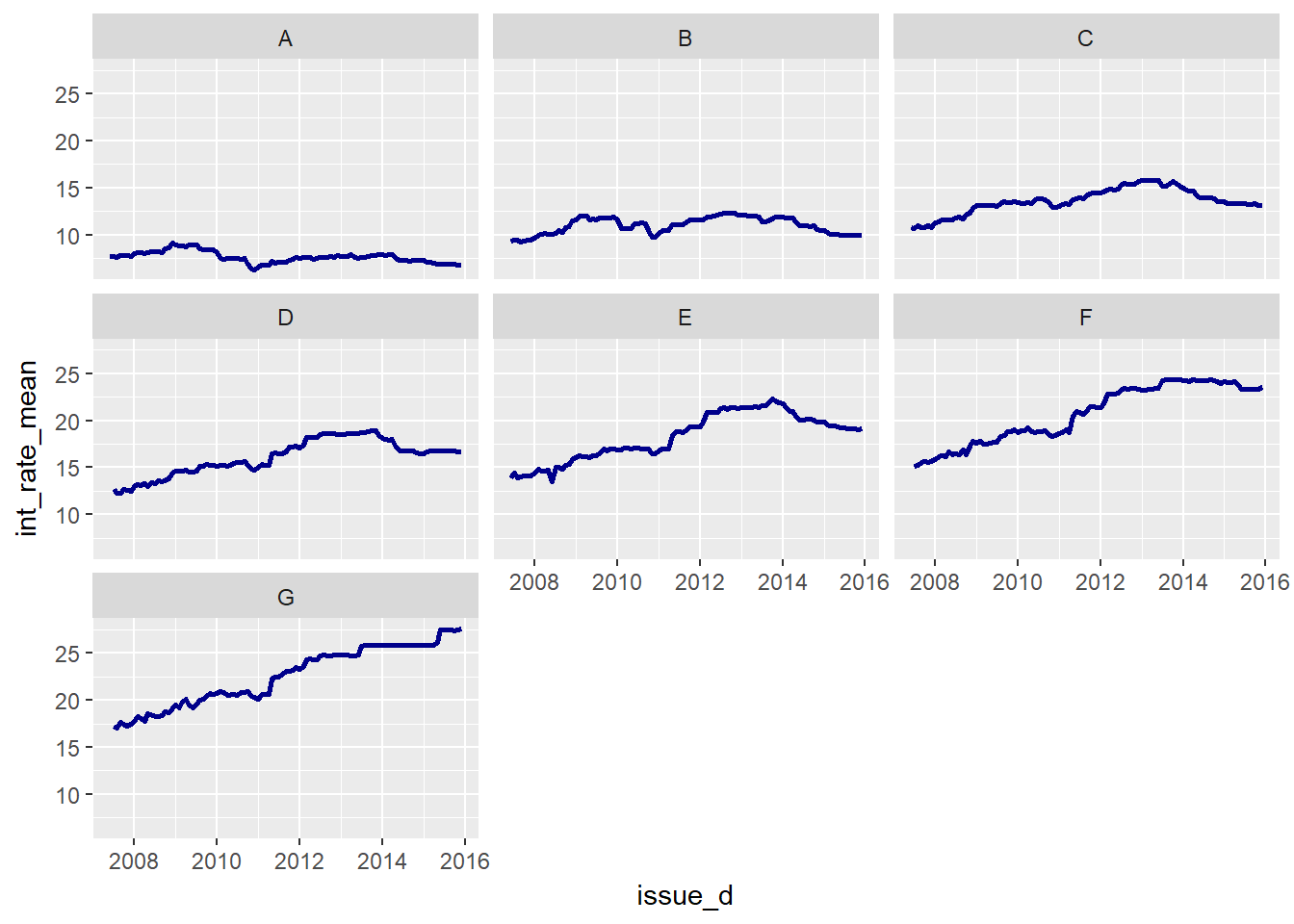

Now let’s take a look at interest rates over time but split the time series by grade to see if there are differences in interest rate development depending on the borrower grade. Again we make use of a dplyr pipe, first selecting the variables of interest, then grouping by attributes and finally summarising the metric interest rate by taking the mean for each goup. We use facet_wrap to split by attribute grade. As we are using the mean for building an aggregate representation, we should be weary of outliers and group size which we had already looked at earlier (ignoring time dimension).

train %>%

select(int_rate, grade) %>%

group_by(grade) %>%

summarise(int_rate_mean = mean(int_rate, na.rm = TRUE),

int_rate_median = median(int_rate, na.rm = TRUE),

n = n()) %>%

knitr::kable()| grade | int_rate_mean | int_rate_median | n |

|---|---|---|---|

| A | 7.244639 | 7.26 | 118281 |

| B | 10.830884 | 10.99 | 203741 |

| C | 13.979427 | 13.98 | 196815 |

| D | 17.178566 | 16.99 | 111490 |

| E | 19.899484 | 19.99 | 56765 |

| F | 23.572306 | 23.76 | 18454 |

| G | 25.624502 | 25.83 | 4358 |

Group size are relatively large except for grade G which may have only a few hundred loans per group when grouping by grade and date. Mean and median seem fairly close at this non-granular level. Let’s plot the data.

train %>%

select(int_rate, grade, issue_d) %>%

group_by(grade, issue_d) %>%

summarise(int_rate_mean = mean(int_rate, na.rm = TRUE)) %>%

ggplot(aes(issue_d, int_rate_mean)) +

geom_line(color= "darkblue", size = 1) +

facet_wrap(~ grade)

We can derive a few points from the plot:

- the mean interest rate is falling or relatively constant for high-rated clients

- the mean interest rate is increasing significantly for low-rated clients

Let’s also look at loan amounts over time in the same manner.

train %>%

select(loan_amnt, grade, issue_d) %>%

group_by(grade, issue_d) %>%

summarise(loan_amnt_mean = mean(loan_amnt, na.rm = TRUE)) %>%

ggplot(aes(issue_d, loan_amnt_mean)) +

geom_line(color= "darkblue", size = 1) +

facet_wrap(~ grade)

We can derive a few points from the plot:

- the mean loan amount is increasing for all grades

- while high-rated clients have some mean loan amount volatility, it is much higher for low-rated clients

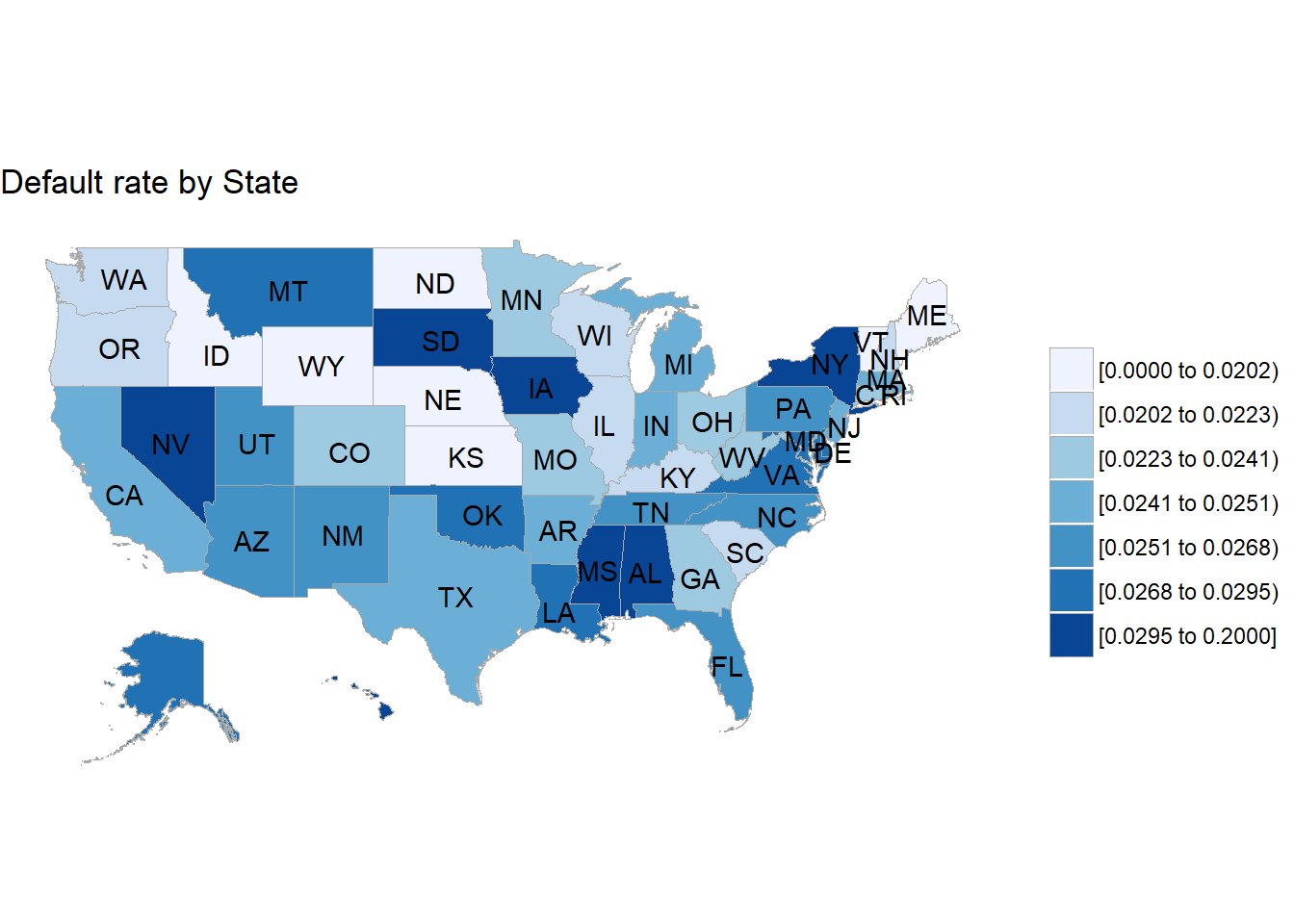

9.5 Geolocation Plots

Let’s remind ourselves of the geolocation variables in the data and their information power.

- zip_code (geo-info)

- addr_state (geo-info)

geo_vars <- c("zip_code", "addr_state")

meta_loans %>%

select(variable, p_zeros, p_na, type, unique) %>%

filter_(~ variable %in% geo_vars) %>%

knitr::kable()| variable | p_zeros | p_na | type | unique |

|---|---|---|---|---|

| zip_code | 0 | 0 | character | 935 |

| addr_state | 0 | 0 | character | 51 |

loans %>%

select_(.dots = geo_vars) %>%

str()## Classes 'tbl_df', 'tbl' and 'data.frame': 887379 obs. of 2 variables:

## $ zip_code : chr "860xx" "309xx" "606xx" "917xx" ...

## $ addr_state: chr "AZ" "GA" "IL" "CA" ...We see that zip_code seems to be the truncated US postal code with only first three digits having a value. The addr_state seems to be the state names in a two-letter abbreviation.

We can use the choroplethr package to work with maps (alternatives may be maps among other packages). While choroplethr provides functions to work with the data, its sister package choroplethrMaps contains corresponding maps that can be used by choroplethr. One issue with choroplethr is that it attaches the package plyr which means many functions from dplyr are masked as it is the successor to plyr. Thus we do not load the package choroplethr despite using its functions frequently. However, we need to load choroplethrMaps as it has some data that is not exported from its namespace so syntax like choroplethrMaps::state.map where state.map is the rdata file does not work. An alternative might be to load the file directly by going to the underlying directory, e.g. R-3.x.x\library\choroplethrMaps\data but this is cumbersome. As discussed before, when attaching a library, we just need to make sure that important functions of other packages are not masked. In this case it’s fine.

For details on those libraries, see CRAN choroplethr and CRAN choroplethrMaps.

First we aggregate the default rate by state.

default_rate_state <-

train %>%

select(default, addr_state) %>%

group_by(addr_state) %>%

summarise(default_rate = sum(default, na.rm = TRUE) / n())

knitr::kable(default_rate_state)| addr_state | default_rate |

|---|---|

| AK | 0.0289280 |

| AL | 0.0300944 |

| AR | 0.0243994 |

| AZ | 0.0260203 |

| CA | 0.0247008 |

| CO | 0.0233654 |

| CT | 0.0222655 |

| DC | 0.0131646 |

| DE | 0.0291604 |

| FL | 0.0267678 |

| GA | 0.0238065 |

| HI | 0.0303441 |

| IA | 0.2000000 |

| ID | 0.0000000 |

| IL | 0.0201743 |

| IN | 0.0242243 |

| KS | 0.0162975 |

| KY | 0.0204172 |

| LA | 0.0268172 |

| MA | 0.0246096 |

| MD | 0.0279492 |

| ME | 0.0023474 |

| MI | 0.0249429 |

| MN | 0.0239122 |

| MO | 0.0222711 |

| MS | 0.0301540 |

| MT | 0.0289296 |

| NC | 0.0263557 |

| ND | 0.0075377 |

| NE | 0.0156087 |

| NH | 0.0206490 |

| NJ | 0.0249566 |

| NM | 0.0264766 |

| NV | 0.0303213 |

| NY | 0.0294889 |

| OH | 0.0225960 |

| OK | 0.0283897 |

| OR | 0.0209814 |

| PA | 0.0256838 |

| RI | 0.0245849 |

| SC | 0.0218611 |

| SD | 0.0301164 |

| TN | 0.0267720 |

| TX | 0.0240810 |

| UT | 0.0250696 |

| VA | 0.0279697 |

| VT | 0.0172771 |

| WA | 0.0216223 |

| WI | 0.0213508 |

| WV | 0.0225434 |

| WY | 0.0179455 |

The data is already in a good format but when using choroplethr we need to adhere to some conventions to make it work out of the box. The first thing would be to bring the state names into a standard format. In fact, we can investigate the format by looking e.g. into the choroplethrMaps::state.map dataset which we later use to map our data. We first load the library and then the data via the function utils::data(). From the documentation, we also learn that state.map is a “data.frame which contains a map of all 50 US States plus the District of Columbia.” It is based on a shapefile which is often used in geospatial visualizations and “taken from the US Census 2010 Cartographic Boundary shapefiles page”. We are interested in the region variable which seems to hold the state names.

library(choroplethrMaps)

utils::data(state.map)

str(state.map)## 'data.frame': 50763 obs. of 12 variables:

## $ long : num -113 -113 -112 -112 -111 ...

## $ lat : num 37 37 37 37 37 ...

## $ order : int 1 2 3 4 5 6 7 8 9 10 ...

## $ hole : logi FALSE FALSE FALSE FALSE FALSE FALSE ...

## $ piece : Factor w/ 66 levels "1","2","3","4",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ group : Factor w/ 226 levels "0.1","1.1","10.1",..: 1 1 1 1 1 1 1 1 1 1 ...

## $ id : chr "0" "0" "0" "0" ...

## $ GEO_ID : Factor w/ 51 levels "0400000US01",..: 3 3 3 3 3 3 3 3 3 3 ...

## $ STATE : Factor w/ 51 levels "01","02","04",..: 3 3 3 3 3 3 3 3 3 3 ...

## $ region : chr "arizona" "arizona" "arizona" "arizona" ...

## $ LSAD : Factor w/ 0 levels: NA NA NA NA NA NA NA NA NA NA ...

## $ CENSUSAREA: num 113594 113594 113594 113594 113594 ...unique(state.map$region)## [1] "arizona" "arkansas" "louisiana"

## [4] "minnesota" "mississippi" "montana"

## [7] "new mexico" "north dakota" "oklahoma"

## [10] "pennsylvania" "tennessee" "virginia"

## [13] "california" "delaware" "west virginia"

## [16] "wisconsin" "wyoming" "alabama"

## [19] "alaska" "florida" "idaho"

## [22] "kansas" "maryland" "colorado"

## [25] "new jersey" "north carolina" "south carolina"

## [28] "washington" "vermont" "utah"

## [31] "iowa" "kentucky" "maine"

## [34] "massachusetts" "connecticut" "michigan"

## [37] "missouri" "nebraska" "nevada"

## [40] "new hampshire" "new york" "ohio"

## [43] "oregon" "rhode island" "south dakota"

## [46] "district of columbia" "texas" "georgia"

## [49] "hawaii" "illinois" "indiana"So it seems we need small case long form state names which implies we need a mapping from the short names in our data. Some internet research may give us the site American National Standards Institute (ANSI) Codes for States where we find a mapping under section FIPS Codes for the States and the District of Columbia. We could try using some parsing tool to extract the table from the web page but for now we take a short cut and simply copy paste the data into a tab-delimited txt file and read the file with readr::read_tsv(). We then cross-check if all abbreviations used in our loans data are present in the newly created mapping table.

states <- readr::read_tsv("./data//us_states.txt")## Parsed with column specification:

## cols(

## Name = col_character(),

## `FIPS State Numeric Code` = col_integer(),

## `Official USPS Code` = col_character()

## )str(states)## Classes 'tbl_df', 'tbl' and 'data.frame': 51 obs. of 3 variables:

## $ Name : chr "Alabama" "Alaska" "Arizona" "Arkansas" ...

## $ FIPS State Numeric Code: int 1 2 4 5 6 8 9 10 11 12 ...

## $ Official USPS Code : chr "AL" "AK" "AZ" "AR" ...

## - attr(*, "spec")=List of 2

## ..$ cols :List of 3

## .. ..$ Name : list()

## .. .. ..- attr(*, "class")= chr "collector_character" "collector"

## .. ..$ FIPS State Numeric Code: list()

## .. .. ..- attr(*, "class")= chr "collector_integer" "collector"